Adding Resiliency to LNet

Introduction

By design LNet is a lossy connectionless network: there are cases where messages can be dropped without the sender being notified. Here we explore the possibilities of making LNet more resilient, including having it retransmit messages over alternate routes. What can be done in this area is constrained by the design of LNet.

In the following discussion, node will often be the shorthand for local node, while peer will be shorthand for peer node or remote node.

Put, Ack, Get, Reply

Within LNet there are three cases of interest: Put, Put+Ack, and Get+Reply.

- Put: The simplest message type is a bare Put message. This message is sent on the wire, and after that no further response is expected by LNet. In terms of error handling, this means that a failure to send can result in an error, but any kind of failure after the message has been sent will result in the message being dropped without notification. There is nothing LNet can do in the latter case; it is up to the higher layers that use LNet to detect what is going on and take whatever corrective action is required.

- Put+Ack: The sender of a Put can ask for an Ack from the receiver. The Ack message is generated by LNet on the receiving node (the peer), and this is done as soon as the Put has been received. In contrast to a bare Put, this means LNet on the sender can track whether an Ack arrives, and if it does not arrive promptly it can take some action. Such an action can take two forms: inform the upper layers that the Ack took too long to arrive, or retry within LNet itself.

- Get+Reply: With a Get message, there is always a Reply from the receiver. Prior to the Get, the sender and receiver agree on the MD that the data for the Reply must be obtained from, so LNet on the receiving node can generate the Reply message as soon as the Get has been received. Failure detection and handling is similar to the Put+Ack case.

The protocols used to implement LNet tend to be connection-oriented, and implement some kind of handshake or ack protocol that tells the sender that a message has been received. As long as the LND actually reports errors to LNet (not a given, alas) this means that in practice the sender of a message can reliably determine whether the message was successfully sent to another node. When the destination node is on the same LNet network, this is sufficient to enable LNet itself to detect failures even in the bare Put case. But in a routed configuration this only guarantees that the LNet router received the message, and if the LNet router then fails to forward it, a bare Put will be lost without trace.

PtlRPC

Users of LNet that send bare Put messages must implement their own methods to detect whether a message was lost. The general rule is simple: the recipient of a Put is supposed to react somehow, and if the reaction doesn't happen within a set amount of time, the sender assumes that either the Put was lost, or the recipient is in some other kind of trouble.

For our purposes PtlRPC is of interest. PtlRPC messages can be classified as Request+Response pairs. Both a Request and a Response are built from one or more Get or Put messages. A node that sends a PtlRPC Request requires the receiver to send a Response within a set amount of time, and failing this the Request times out and PtlRPC takes corrective action.

Adaptive timeouts add an interesting wrinkle to this mechanism: they allow the recipient of a Request to tell the sender to "please wait", informing it that the recipient is alive and working but not able to send the Response before the normal timeout. For LNet the interesting implication is that while this is going on, there will be some traffic between the sender and recipient. However, this traffic may also be in the form of out-of-band information invisible to LNet.

LNet Interfaces

The interfaces that LNet provides to the upper layers should work as follows. Set up an MD (Memory Descriptor) to send data from (for a Put) or receive data into (for a Get). An event handler is associated with the MD. Then call LNetGet() or LNetPut() as appropriate.

LNetGet()

If all goes well, the event handler sees two events: LNET_EVENT_SEND to indicate the Get message was sent, and LNET_EVENT_REPLY to indicate the Reply message was received. Note that the send event can happen after the reply event (this is actually the typical case).

If sending the Get message failed, LNET_EVENT_SEND will include an error status, no LNET_EVENT_REPLY will happen, and cleanup must be done accordingly. If the return value of LNetGet() indicates an error then sending the message certainly failed, but a 0 return does not imply success, only that no failure has yet been encountered.

A damaged Reply message will be dropped, and does not result in an LNET_EVENT_REPLY. Effectively the only way for LNET_EVENT_REPLY to have an error status is if LNet detects a timeout before the Reply is received.

LNetPut() LNET_ACK_REQ

The caller of LNetPut() requests an Ack by using LNET_ACK_REQ as the value of the ack parameter.

A Put with an Ack is similar to a Get+Reply pair. The events in this case are LNET_EVENT_SEND and LNET_EVENT_ACK.

For a Put, the LNET_EVENT_SEND indicates that the MD is no longer used by the LNet code and the caller is free to discard or re-use it.

As with LNET_EVENT_REPLY, LNET_EVENT_ACK is expected to only carry an error indication if there was a timeout before the Ack was received.

LNetPut() LNET_NOACK_REQ

The caller of LNetPut() requests no Ack by using LNET_NOACK_REQ as the value of the ack parameter.

A Put without an Ack will only generate an LNET_EVENT_SEND, which indicates that the MD can now be re-used or discarded.
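
The event sequences for the three call types can be summarized in a small model. This is an illustrative Python sketch, not LNet code: the Op class, its fields and the string keys are hypothetical, and only the event names come from the text above. It tracks which events an operation still expects, independent of arrival order, since the send event may arrive after the reply.

```python
# Final event (besides SEND) expected for each kind of operation.
# "get" -> REPLY, "put_ack" -> ACK, "put_noack" -> nothing further.
FINAL_EVENT = {"get": "LNET_EVENT_REPLY",
               "put_ack": "LNET_EVENT_ACK",
               "put_noack": None}


class Op:
    """Hypothetical tracker for one LNetGet()/LNetPut() call."""

    def __init__(self, kind):
        self.pending = {"LNET_EVENT_SEND"}
        if FINAL_EVENT[kind] is not None:
            self.pending.add(FINAL_EVENT[kind])
        self.failed = False

    def on_event(self, event, status=0):
        """Record an event; return True once nothing more is expected."""
        self.pending.discard(event)
        if status != 0:
            self.failed = True
            if event == "LNET_EVENT_SEND":
                # A failed send means no REPLY/ACK will follow.
                self.pending.clear()
        return not self.pending
```

A caller would clean up the MD only once on_event() returns True, whether or not the operation failed.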

Possible Failures

There are a number of failures we can encounter, only some of which LNet may address.

- Node interface failure: there is some issue with the local interface that prevents it from sending (or receiving) messages.

- Peer interface failure: there is some issue with the remote interface that prevents it from receiving (or sending) messages.

- Path failure: the local interface is working properly, but messages never reach the peer interface.

- Soft peer failure: the peer is properly receiving messages but unresponsive for other reasons.

- Hard peer failure: the peer is down, and unresponsive on all its interfaces.

In a routed LNet configuration these scenarios apply to each hop.

These failures will show up in a number of ways:

- Node interface reports failure. This includes the interface itself being healthy but it noting that the cable connecting it to a switch, or the switch port, is somehow not working right.

- Peer interface not reachable. A peer interface that should be reachable from the node interface cannot be reached. Depending on the error this can result in "fast" error or a timeout in the LND-level protocol.

- Some peer interfaces on a net not reachable. The node interface appears to be OK, but there are interfaces on several peers it cannot talk to.

- All peer interfaces on a net not reachable. The node interface appears to be OK, but cannot talk to any peer interface.

- All interfaces of a peer not reachable. All LNDs report errors when talking to a specific peer, but have no problem talking to other peers.

- Put+Ack or Get+Reply timeout. The LND gives no failure indication, but the Ack or Reply takes too long to arrive.

- Dropped Put. Everything appears to work, except it doesn't.

Let's take a look at what LNet on the node can do in each of these cases.

Node Interface Reports Failure

This is the easiest case to work with. The LND can report such a failure to LNet, and LNet then refrains from using this interface for any traffic.

LNet can mark the interface down, and depending on the capabilities of the LND either recheck periodically or wait for the LND to mark the interface up.

Peer Interface Not Reachable

The peer interface cannot be reached from the node interface, but the node interface can talk to other peers. If the peer interface can be reached from other node interfaces then we're dealing with some path failure. Otherwise the peer interface may be bad. If there is only a single node interface that can talk to the peer interface, then the node cannot distinguish between these cases.

LNet can mark this particular node/peer interface combination as something to be avoided.

When there are paths from more than one node interface to the peer interface, and none of these work, but other peer interfaces do work, then LNet can mark the peer interface as bad. Recovery could be done by periodically probing the peer interface, maybe using LNet Ping as a poor-man's equivalent of an LNet Control Packet.

Some Peer Interfaces On A Net Not Reachable

Several peer interfaces on a net cannot be reached from a node interface, but the same node interface can talk to other peers. This is a more severe variant of the previous case.

All Peer Interfaces On A Net Not Reachable

All remote interfaces on a net cannot be reached from a local interface. If there are other, working, interfaces connected to the same net then the balance of probability shifts to the local interface being bad, or there is a severe problem with the fabric.

In practice LNet will not detect "all" remote interfaces being down. But it can detect that for a period of time, no traffic was successfully sent from a local interface, and therefore start avoiding that interface as a whole. Recovery would involve periodically probing the interface, maybe using LNet Ping.

All Interfaces Of A Peer Not Reachable

The peer is likely down. There is little LNet can do here; this is a problem to be handled by upper layers. This includes indicating when LNet should attempt to reconnect.

LNet might treat this as the "remote interface not reachable" case for all the interfaces of the remote node. That is, the fact that all interfaces of the remote node appear to be down makes little difference to the handling, except for a log message indicating this.

Put+Ack Or Get+Reply Timeout

This is the case where the LND does not signal any problem, so the Ack for a Put or Reply for a Get should arrive promptly, with the only delays due to credit-based throttling, and yet it does not do so. Note that this assumes that where possible the LND layer already implements reasonably tight timeouts, so that LNet can assume the problem is somewhere else.

LNet can impose a "reply timeout", and retry over a different path if there is one available. However, if the assumption about the LND is valid, then the implication is that the node is in trouble. So an alternative is to force the upper layers to cope.

One argument for nevertheless implementing this facility in LNet is that it means the upper layers do not have to re-invent and re-implement this wheel time and again.

Dropped Put

No problem was signalled by the LND, and there is no Ack that we could time out waiting for. LNet does not have enough information to do anything, so the upper layers must do so instead.

If this case must be made tractable, LNet can be changed to make the Ack non-optional.

Should LNet Resend Messages

When there are multiple paths available for a message, it makes sense to try to resend it on failure. But where should the resending logic be implemented?

The easiest path is to tell upper layers to resend. For example, PtlRPC has some related logic already. Except that when PtlRPC detects a failure, it disconnects, reconnects, and triggers a recovery operation. This is a fairly heavy-weight process, while the type of resending logic desired is to "just try another path" which differs from what exists today and needs to be implemented for each user.

The alternative then is to have LNet resend a message. There should be some limit to the number of retries, and a limit to the amount of time spent retrying. Otherwise we are requiring the upper layers to implement a timer on each LNetGet() and LNetPut() call to guarantee progress. This introduces an LNet "retry timeout" (as opposed to the "reply timeout" discussed above) as the amount of time after which LNet gives up.

In terms of timeouts, this then gives us the following relationships, from shortest to longest:

- LND Timeout: LND declares that a message won't arrive.

  - IB timeout is (default?) slightly less than 4 seconds

  - LND timeout is the timeout module parameter for o2ib and gni, and the sock_timeout module parameter for sock?

- LNet Reply Timeout: LNet declares an Ack/Reply won't arrive. > LND Timeout * (max hops - 1)

  - Depends on the route!

- LNet Retry Timeout: LNet gives up on retries. > LNet Reply Timeout * max LNet retries

  - Depends on the route!

- peer_timeout module parameter: peer is declared dead. Either use for LNet Retry Timeout, or > LNet Retry Timeout. Using the peer_timeout for the LNet Retry Timeout has the advantage of reducing the number of tunable parameters. A disadvantage is that the peer_timeout is currently a per-LND parameter (each LND has its own tunable value), effectively limiting the number of retries to 1 when the LND timed out.

It is not completely obvious how this scheme interacts with Lustre's timeout parameter (the Lustre RPC timeout, from which a number of timeouts are derived), but at first glance it seems that at least peer_timeout < Lustre timeout.
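
The ordering constraints in the list above can be checked mechanically. The following Python sketch is only an illustration under stated assumptions: the function names are hypothetical, all values are in seconds, and max_hops and max_retries are assumed to be known for the route in question (the text notes that both bounds depend on the route).

```python
def min_timeouts(lnd_timeout, max_hops, max_retries):
    """Return lower bounds (reply_bound, retry_bound) in seconds.

    Both bounds are strict in the text (">"), so real values must
    exceed the numbers returned here.
    """
    reply_bound = lnd_timeout * (max_hops - 1)
    retry_bound = reply_bound * max_retries
    return reply_bound, retry_bound


def is_consistent(lnd_timeout, reply_timeout, retry_timeout,
                  max_hops, max_retries):
    """Check the 'shortest to longest' ordering from the list above."""
    return (reply_timeout > lnd_timeout * (max_hops - 1)
            and retry_timeout > reply_timeout * max_retries)
```

For example, a 50 second LND timeout over a route with 3 hops and 2 retries requires a reply timeout above 100 seconds.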

LNet Health Version 2.0

There are three types of failures that LNet needs to deal with:

- Local Interface failure

- Remote Interface failure

- Timeouts

  - LND detected Timeout

  - LNet detected Timeout

Local Interface Failure

Local interface failures will be detected in one of two ways:

- Synchronously as a return failure to the call to lnd_send()

- Asynchronously as an event that could be detected at a later point.

  - These asynchronous events can be the result of a send operation

  - They can also be independent of send operations, as failures are detected with the underlying device, for example a "link down" event.

Synchronous Send Failures

lnet_select_pathway() can fail for the following reasons:

- Shutdown in progress

- Out of memory

- Interrupt signal received

- Discovery error

- An MD bind failure

- Invalid information given (-EINVAL, -EHOSTUNREACH)

- Message dropped

- Aborting message

- No route found

- Internal failure

All these cases are resource errors and it does not make sense to resend the message as any resend will likely run into the same problem.

Asynchronous Send Failures

LNet should resend the message:

- On LND transmit timeout

- On LND connection failure

- On LND send failure

When a message send fails for one of the reasons outlined above, the behavior should be as follows:

- The local or remote interface health is updated

- Failure statistics incremented

- A resend is issued on a different local interface if there is one available. If there is not one available attempt the same interface again.

- The message will continuously be resent until the timeout expires or the send succeeds.

A new field in the msg, msg_status, will be added. This field will hold the send status of the message.

When a message encounters one of the errors above, the LND will update the msg_status field appropriately and call lnet_finalize().

lnet_finalize() will check if the message has timed out or if it needs to be resent and will take action on it. lnet_finalize() currently calls lnet_complete_msg_locked() to continue the processing. If the message has not been sent, then lnet_finalize() should call another function to resend, lnet_resend_msg_locked().

When a message is initially sent it is tagged with a deadline. The deadline is the current time + peer_timeout. While the message has not timed out it will be resent if needed. The deadline is checked every time we enter lnet_finalize(). When the deadline is reached without a successful send, the MD will be detached.

While the message is in the sending state the MD will not be detached.

lnet_finalize() will also update the statistics (or call a function to update the statistics).
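
The decision described above can be sketched as follows. This is a hedged Python model rather than the actual lnet_finalize() code: the function names, the string results, and the convention that msg_status is 0 on success and nonzero on a resendable LND failure are all assumptions made for illustration.

```python
def make_deadline(send_time, peer_timeout):
    """Deadline tagged on the message when it is first sent."""
    return send_time + peer_timeout


def finalize_action(msg_status, now, deadline):
    """Decide what a finalize step does with a message.

    msg_status: 0 on successful send, nonzero (hypothetical error code)
                on a resendable LND failure.
    Returns "complete", "resend", or "fail".
    """
    if msg_status == 0:
        return "complete"      # normal completion path
    if now >= deadline:
        return "fail"          # deadline reached: detach MD, report error
    return "resend"            # still inside the window: try again
```

Checking the deadline only on entry to the finalize step matches the text: no separate timer is needed for this part, because every send attempt eventually ends in a finalize call.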

Resending

It is possible that a send can fail immediately. In this case we need to take active measures to ensure that we do not enter a tight loop resending until the timeout expires. This could spike the CPU consumption unexpectedly.

To do that the last sent time will be kept in the message. If the message is not sent successfully on any of the existing interfaces, then it will be placed on a queue and will be resent after a specific deadline expires. This will be termed a "(re)send procedure". An interval must expire between each (re)send procedure. A (re)send procedure will iterate through all local and remote interfaces, depending on the source of the send failure.

The router pinger thread will be refactored to handle resending messages. The router pinger thread is started regardless and only used to ping gateways if any are configured. Its operation will be expanded to check the pending message queue and re-send messages.
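
A minimal sketch of the pending message queue, assuming a single resend interval and a periodic caller standing in for the refactored pinger thread. The ResendQueue class and its method names are hypothetical; the point is only that a message is never retried before its last send time plus the interval, which prevents the tight loop.

```python
import heapq


class ResendQueue:
    """Hypothetical pending-message queue drained by a periodic thread."""

    def __init__(self, resend_interval):
        self.resend_interval = resend_interval
        self._heap = []          # entries: (not_before, seq, msg)
        self._seq = 0            # tie-breaker keeps FIFO order

    def defer(self, msg, last_sent):
        """Queue msg so it is not retried before last_sent + interval."""
        heapq.heappush(self._heap,
                       (last_sent + self.resend_interval, self._seq, msg))
        self._seq += 1

    def due(self, now):
        """Pop and return every message whose wait has elapsed."""
        ready = []
        while self._heap and self._heap[0][0] <= now:
            ready.append(heapq.heappop(self._heap)[2])
        return ready
```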

To keep the code simple, when resending, the health value of the interface that had the problem will be updated. If we are sending to a non-MR peer, then we will use the same src_nid, otherwise the peer will be confused. The selection algorithm will then consider the health value when it is selecting the NI or peer NI.

There are two aspects to consider when sending a message:

- The congestion of the local and remote interfaces

- The health of the local and remote interfaces

The selection algorithm will take an average of these two values and will determine the best interface to select. To make the comparison proper, the health value of the interface will be set to the same value as the credits on initialization. It will be decremented on failure to send and incremented on successful send.

A health_value_range module parameter will be added to control the sensitivity of the selection. If it is set to 0, then the best interface will be selected. A value higher than 0 will give a range in which to select the interface. If the value is large enough it will in effect be equivalent to turning off health comparison.
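
One possible reading of this selection rule is sketched below in Python. The text does not define how the range is applied, so the details here are assumptions: the score is the plain average of credits and health, every interface whose score lies within health_value_range of the best score is eligible, and among the eligible interfaces the one with the most credits wins. The function name and the dictionary layout are hypothetical.

```python
def select_interface(interfaces, health_value_range=0):
    """Pick an interface name from {name: (credits, health)}.

    Score is the average of credits and health. Interfaces whose score
    is within health_value_range of the best score are eligible; among
    those, the one with the most credits wins (ties broken by name).
    """
    scores = {name: (credits + health) / 2
              for name, (credits, health) in interfaces.items()}
    best = max(scores.values())
    eligible = [n for n, s in scores.items()
                if best - s <= health_value_range]
    return max(sorted(eligible), key=lambda n: interfaces[n][0])
```

Under this reading a range of 0 selects the best combined score, while a very large range makes every interface eligible, so only credits decide and health is effectively ignored.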

Hard Failure

It's possible that the local interface might get into a hard failure scenario by receiving one of these events from the o2iblnd. socklnd needs to be investigated to determine if there are similar cases:

- IB_EVENT_DEVICE_FATAL

- IB_EVENT_PORT_ACTIVE

- IB_EVENT_PORT_ERR

- RDMA_CM_EVENT_DEVICE_REMOVAL

In these cases the local interface cannot be used any longer, so it cannot be selected as part of the selection algorithm. If there are no other interfaces available, then no messages can be sent out of the node.

A corresponding event can be received to indicate that the interface is operational again.

A new LNet/LND API will be created to pass these events from the LND to LNet.

Timeouts

LND Detected Timeouts

Upper layers ask LNet to send a GET or a PUT via the LNetGet() and LNetPut() APIs. LNet then calls into the LND to complete the operation. The LND encapsulates the LNet message into an LND-specific message with its own message type. For example, in the o2iblnd it is kib_msg_t.

When the LND transmits the LND message it sets a tx_deadline for that particular transmit. This tx_deadline remains active until the remote has confirmed receipt of the message. Receipt of the message at the remote is when LNet is informed that a message has been received by the LND, done via lnet_parse(), then LNet calls back into the LND layer to receive the message.

Therefore if a tx_deadline is hit, it is safe to assume that the remote end has not received the message. The reasons are described further below.

By handling the tx_deadline properly we are able to account for almost all next-hop failures. LNet would've done its best to ensure that a message has arrived at the immediate next hop.

The tx_deadline is LND-specific, and derived from the timeout (or sock_timeout) module parameter of the LND.

LNet Detected Timeouts

As mentioned above at the LNet layer LNET_MSG_PUT can be told to expect LNET_MSG_ACK to confirm that the LNET_MSG_PUT has been processed by the destination. Similarly LNET_MSG_GET expects an LNET_MSG_REPLY to confirm that the LNET_MSG_GET has been successfully processed by the destination.

The pairs LNET_MSG_PUT+LNET_MSG_ACK and LNET_MSG_GET+LNET_MSG_REPLY are not covered by the tx_deadline in the LND. If the upper layer does not take precautions it could wait forever on the LNET_MSG_ACK or LNET_MSG_REPLY. Therefore it is reasonable to expect that LNet provides a TIMEOUT event if either of these messages is not received within the expected timeout.

The question is whether LNet should resend the LNET_MSG_PUT or LNET_MSG_GET if it doesn't receive the corresponding response.

Consider the case where there are multiple LNet routers between two nodes, N1 and N2. These routers can possibly be routing between different hardware, for example OPA and MLX. N1 via the LND can reliably determine the health of the next-hop's interfaces. It cannot, however, reliably determine the health of further hops in the chain. Each node can determine the health of the immediate next-hops. Therefore, each node in the path can be trusted to ensure that the message has arrived at the immediate next hop.

If there is a failure along the path and N1 does not receive the expected LNET_MSG_ACK or LNET_MSG_REPLY, and it knows that the message has been received by its next-hop, it has no way to determine where the failure happened. If it decides to resend the message, then there is no way to reliably select a reasonable peer_ni, especially considering that the message has in fact been received properly by the next-hop. We could say that we will simply try all the peer_nis of the destination. But in fact this will already be done by the node in the chain which is encountering a problem completing the message with its next-hop. So the net effect is the same. If both are implemented, then duplication of messages is a certainty.

Furthermore, the responsibility for end-to-end reliability falls on the shoulders of the layers using LNet. Ptlrpc's design clearly takes the end-to-end reliability of RPCs into consideration. If LNet adds an LNET_ACK_TIMEOUT and LNET_REPLY_TIMEOUT event (or adds an error status to the current events), ptlrpc can react to the error status appropriately.

The argument against this approach is mixed clusters, where not all nodes are MR capable. In this case we cannot rely on intermediary nodes to try all the interfaces of their next-hop. However, as is assumed in the Multi-Rail design, if not all nodes are MR capable, then not all Multi-Rail features are expected to work.

This approach would add the LNet resiliency required and avoid the many corner cases that would need to be addressed when receiving messages which have already been processed.

Relationship between different timeouts.

There are multiple timeouts kept at different layers of the code. It is important to set the timeout defaults such that it works best, and to give guidance on how the different timeouts interact together.

Looking at timeouts from a bottom up approach:

- IB/TCP/GNI re-send timeout

- LND transmit timeout

  - The timeout to wait for before a transmit fails and lnet_finalize() is called with an appropriate error code. This will result in a resend.

- Transaction timeout

  - Timeout after which LNet sends a timeout event for a missing REPLY/ACK.

- Message timeout

  - Timeout after which LNet abandons resending a message.

- Resend interval

  - The interval between each (re)send procedure.

- RPC timeout

  - The INITIAL_CONNECT_TIMEOUT is set to 5 sec

  - ldlm_timeout and obd_timeout are tunables and default to LDLM_TIMEOUT_DEFAULT and OBD_TIMEOUT_DEFAULT.

IB/TCP/GNI re-send timeout < LND transmit timeout < RPC timeout.

A retry count can be specified. That's the number of times to resend after the LND transmit timeout expires.

The timeout value before failing an LNET_MSG_[PUT | GET] will be:

message timeout = (retry count * LND transmit timeout) + (resend interval * retry count)

where

retry count = min(retry count, 5)

message timeout <= transaction timeout
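
The formula above translates directly into a few lines of Python. The function and constant names are hypothetical; the arithmetic, the cap of 5 retries and the constraint against the transaction timeout are taken from the text. All values are in seconds.

```python
MAX_RETRY_COUNT = 5  # cap from "retry count = min(retry count, 5)"


def message_timeout(retry_count, lnd_transmit_timeout, resend_interval):
    """Seconds before an LNET_MSG_PUT/GET is failed, per the formula above."""
    retries = min(retry_count, MAX_RETRY_COUNT)
    return retries * lnd_transmit_timeout + retries * resend_interval


def fits_transaction(retry_count, lnd_transmit_timeout, resend_interval,
                     transaction_timeout):
    """Check the constraint message timeout <= transaction timeout."""
    return message_timeout(retry_count, lnd_transmit_timeout,
                           resend_interval) <= transaction_timeout
```

For instance, message_timeout(5, 50, 0) evaluates to 250, and a requested retry count of 8 is capped at 5 before the calculation.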

It has been observed that mount could hang for a long time if a discovery ping is not responded to. This could happen if an OST is down while a client mounts the file system. In this case it does not make sense to hold up the mount procedure while discovery is taking place. For some cases like discovery the algorithm would specify a timeout different from the configured one.

Other cases where a timeout can be specified which overrides the configured timeout are router ping and manual ping.

One issue to consider is that the LND transmit timeout currently defaults to 50s. So if we retry up to five times we could be held up for 250s, which would be unacceptable.

The question to answer is, does it make sense for the LND transmit timeout to be set to 50s? Even though the IB/TCP/GNI timeout can be long, it might make more sense to pre-empt that communication stack and attempt to resend the message from the LNet layer on a different interface, or even reuse the same interface if only one is available.

Resiliency vs. Reliability

There are two concepts that need to stay separate: reliability of RPC messages and LNet Resiliency. This feature attempts to add LNet Resiliency against local and immediate next hop interface failure. End-to-end reliability is to ensure that upper layer messages, namely RPC messages, are received and processed by the final destination, and to take appropriate action in case this does not happen. End-to-end reliability is the responsibility of the application that uses LNet, in this case ptlrpc. Ptlrpc already has a mechanism to ensure this.

To clarify the terminology further, LNET MESSAGE should be used to describe one of the following messages:

- LNET_MSG_PUT

- LNET_MSG_GET

- LNET_MSG_ACK

- LNET_MSG_REPLY

- LNET_MSG_HELLO

LNET TRANSACTION should be used to describe

- LNET_MSG_PUT, LNET_MSG_ACK sequence

- LNET_MSG_GET, LNET_MSG_REPLY sequence

NEXT-HOP should describe a peer that is exactly one hop away.

The role of LNet is to ensure that an LNET MESSAGE arrives at the NEXT-HOP, and to flag when a transaction fails to complete.

Upper layers should ensure that the transactions they initiate complete successfully, and take appropriate action otherwise.

Reasons for timeout

The discussion here refers to the LND Transmit timeout.

Timeouts could occur due to several reasons:

- The message is on the sender's queue and is not posted within the timeout

  - This indicates that the local interface is too busy and is unable to process the messages on its queue.

- The message is posted but the transmit is never completed

  - An actual culprit cannot be determined in this scenario. It could be a sender issue, a receiver issue or a network issue.

- The message is posted, the transmit is completed, but the remote never acknowledges.

  - In the IBLND, there are explicit acknowledgements in most cases when the message is received and forwarded to the LNet layer. Look below for more details.

  - If an LND message is in waiting state and it didn't receive the expected response, then this indicates an issue at the remote's LND, either at the lower protocol, IB/TCP, or the notification at the LNet layer is not being processed in a timely fashion.

Each of these scenarios can be handled differently.

Desired Behavior

The desired behavior is listed for each of the above scenarios:

Scenario 1 - Message not posted

- Connection is closed

- The local interface health is updated

- Failure statistics incremented

- A resend is issued on a different local interface if there is one available.

- If no other local interface is present, or all are in failed mode, then the send fails.

Scenario 2 - Transmit not completed

- Connection is closed

- The local and remote interface health is updated

- Failure statistics incremented on both local and remote

- A resend is issued on a different path altogether if there is one available.

- If no other path is present then the send fails.

Scenario 3 - No acknowledgement by remote

- Connection is closed

- The remote interface health is updated

- Failure statistics incremented

- A resend is issued on a different remote interface if there is one available.

- If no other remote interface is present then the send fails.

Note that the behavior outlined is consistent with the explicit error cases identified in the previous section. Only Scenario 2 diverges, as a different path is selected altogether, but the same code structure is still used.
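
Because the three scenarios share one structure, they can be expressed as a small table plus one handler. The Python sketch below is illustrative only: the scenario keys, action strings and function name are hypothetical, and for Scenarios 1 and 3 it assumes the failure statistics are incremented on the same side whose health is updated, which the lists above do not state explicitly.

```python
# scenario -> (sides whose health is demerited, what a resend varies)
SCENARIOS = {
    "not_posted":       (("local",),          "local interface"),
    "tx_not_completed": (("local", "remote"), "path"),
    "no_remote_ack":    (("remote",),         "remote interface"),
}


def handle_timeout(scenario, alternative_available):
    """Return the list of actions for an LND transmit timeout."""
    demerit, vary = SCENARIOS[scenario]
    actions = ["close connection"]
    actions += ["update %s health" % side for side in demerit]
    actions += ["increment %s failure stats" % side for side in demerit]
    if alternative_available:
        actions.append("resend on different %s" % vary)
    else:
        actions.append("fail send")
    return actions
```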

Implementation Specifics

All of these cases should end up calling lnet_finalize() API with the proper return code. lnet_finalize() will be the funnel where all these events shall be processed in a consistent manner. When the message is completed via lnet_complete_msg_locked(), the error is checked and the proper behavior as described above is executed.

Peer_timeout

In the cases when a GET or a PUT transaction is initiated an associated deadline needs to be tagged to the corresponding transaction. This deadline indicates how long LNet should wait for a REPLY or an ACK before it times out the entire transaction.

A new thread is required to check if a transaction deadline has expired. OW: Can a timer do this? Or is one timer per message too resource-intensive? If a queue is used, then ideally new messages can simply be added to the tail, with their deadline always >= the current tail. With the queue sorted by deadline the checker thread can look at the deadline of the message at the head of the queue to determine how long it sleeps.

When a transaction deadline expires an appropriate event is generated towards PTLRPC.

When the REPLY or the ACK is received the message is removed from the check queue of the thread and a success event is generated towards PTLRPC.

Within a transaction deadline, if there is a determination that the GET or PUT message failed to be sent to the next-hop then the GET or PUT can be resent.

OW: How is this deadline determined? Naming this section peer_timeout suggests you want to use that? Conceptually we can distinguish between an LNet transaction timeout and an LNet peer timeout.
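
The queue-based checker discussed above can be modelled as follows. This Python sketch assumes a single fixed transaction timeout and a non-decreasing clock, which is what makes a plain FIFO stay sorted by deadline. The TransactionQueue class, its method names and the use of message ids are hypothetical.

```python
from collections import deque


class TransactionQueue:
    """Hypothetical FIFO of (deadline, msg_id), sorted by construction."""

    def __init__(self, transaction_timeout):
        self.timeout = transaction_timeout
        self._q = deque()

    def add(self, msg_id, now):
        # A fixed timeout plus a non-decreasing clock means each new
        # deadline is >= the current tail, so appending keeps order.
        self._q.append((now + self.timeout, msg_id))

    def complete(self, msg_id):
        """REPLY/ACK arrived: drop the transaction from the queue."""
        self._q = deque(e for e in self._q if e[1] != msg_id)

    def expire(self, now):
        """Return expired msg_ids and how long the checker may sleep."""
        expired = []
        while self._q and self._q[0][0] <= now:
            expired.append(self._q.popleft()[1])
        sleep_for = self._q[0][0] - now if self._q else None
        return expired, sleep_for
```

If per-message timeouts were allowed to differ, the FIFO property would no longer hold and a priority queue would be needed instead.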

Resend Window

Resends are terminated when the peer_timeout for a message expires.

Resends should also terminate if all local_nis and/or peer_nis are in bad health. New messages can still use paths that have less than optimal health.

A message is resent after the LND transmit deadline expires, or on a failure return code. Both these paths are handled in the same manner, since a transmit deadline triggers a call to lnet_finalize(). Both inline and asynchronous errors also end up in lnet_finalize().

Therefore the least number of transmits = peer_timeout / LND transmit deadline.

Depending on the frequency of errors, LNet may do more re-transmits. LNet will stop re-transmitting and declare a peer dead, if the peer_timeout expires or all the different paths have been tried with no success.

In the default case where LND transmit timeout is set to 50 seconds and the peer_timeout is set to 180 seconds, then LNet will re-transmit 3 times before it declares the peer dead.

peer_timeout can be increased to fit in more re-transmits or LND transmit timeout can be decreased.
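
The trade-off between the two tunables is simple integer arithmetic. The helper names in this Python sketch are hypothetical, and it assumes whole-second values and that every attempt runs into the full LND transmit deadline.

```python
def transmit_attempts(peer_timeout, lnd_transmit_timeout):
    """Whole LND transmit deadlines that fit in the peer_timeout window."""
    return peer_timeout // lnd_transmit_timeout


def lnd_timeout_for(peer_timeout, wanted_attempts):
    """Largest whole-second LND transmit timeout that still allows
    at least wanted_attempts within peer_timeout."""
    return peer_timeout // wanted_attempts
```

With the defaults quoted above, transmit_attempts(180, 50) gives 3; fitting 6 attempts into the same 180 second window would need an LND transmit timeout of at most 30 seconds.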

Alexey Lyashkov made a presentation at LAD 16 that outlines the best values for all Lustre timeouts.

Locking

MD is always protected by the lnet_res_lock, which is CPT specific.

Other data structures such as the_lnet.ln_msg_containers, peer_ni, local ni, etc. are protected by the lnet_net_lock.

The MD should be kept intact during the resend procedure. If there is a failure to resend then the MD should be released and message memory freed.

Selection Algorithm with Health

Algorithm Parameters

| Parameter | Values | |
|---|---|---|
| SRC NID | Specified (A) | Not specified (B) |
| DST NID | local (1) | not local (2) |
| DST NID | MR (C) | NMR (D) |

Note that when communicating with an NMR peer we need to ensure that the source NI is always the same: there are a few places where the upper layers use the src nid from the message header to determine its originating node, as opposed to using something like a UUID embedded in the message. This means when sending to an NMR node we need to pick a NI and then stick with that going forward.

Note: When sending to a router that scenario boils down to considering the router as the next-hop peer. The final destination peer NIs are no longer considered in the selection. The next-hop can then be MR or non-MR and the code will deal with it accordingly.
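The case labels used in the following subsections (A1C, B2D, and so on) are simply the three parameters from the table concatenated. A minimal sketch of that mapping is shown here; the struct and function names are hypothetical and exist only for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

/* Hypothetical description of a send request, one field per table row. */
struct send_params {
	bool src_specified;	/* A if true, B otherwise */
	bool dst_local;		/* 1 if true, 2 (routed) otherwise */
	bool dst_mr;		/* C if true, D (non-MR) otherwise */
};

/* Write the three-character case label plus a terminating NUL into out. */
static void selection_case(const struct send_params *p, char out[4])
{
	out[0] = p->src_specified ? 'A' : 'B';
	out[1] = p->dst_local ? '1' : '2';
	out[2] = p->dst_mr ? 'C' : 'D';
	out[3] = '\0';
}
```

Per the note above, a routed send would evaluate the MR/NMR column against the next-hop router rather than the final destination.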

A1C - src specified, local dst, mr dst

- find the local ni given src_nid

- if no local ni found fail

- if local ni found is down, then fail

- find peer identified by the dst_nid

- select the best peer_ni for that peer

- take into account the health of the peer_ni. If we merely demerit the peer_ni it can still be the best of the bunch, so we need to keep track of the peer_nis/local_nis a message was sent over in order not to revisit the same ones again. This record should be part of the message.

- If this is a resend and the resend peer_ni is specified, then select this peer_ni if it is healthy, otherwise continue with the algorithm.

- if this is a resend, do not select the same peer_ni again unless no other peer_nis are available and that peer_ni is not in a HARD_ERROR state.

A2C - src specified, route to dst, mr dst

- find local ni given src_nid

- if no local ni found fail

- if local ni found is down, then fail

- find router to dst_nid

- If no router present then fail.

- find best peer_ni (for the router) to send to

- take into account the health of the peer_ni

- If this is a resend and the resend peer_ni is specified, then select this peer_ni if it is healthy, otherwise continue with the algorithm.

- If this is a resend and the peer_ni is not specified, do not select the same peer_ni again. The original destination NID can be found in the message.

- Keep trying to send to the peer_ni even if it has been used before, as long as it is not in a HARD_ERROR state.

A1D - src specified, local dst, nmr dst

- find local ni given src nid

- if no local_ni found fail

- if local ni found is down, then fail

- find peer_ni using dst_nid

- send to that peer_ni

- If this is a resend retry the send on the peer_ni unless that peer_ni is in a HARD_ERROR state, then fail.

A2D - src specified, route to dst, nmr dst

- find local_ni given the src_nid

- if no local_ni found fail

- if local ni found is down, then fail

- find router to go through to that peer_ni

- send to the NID of that router.

- If this is a resend retry the send on the peer_ni unless that peer_ni is in a HARD_ERROR state, then fail.

B1C - src any, local dst, mr dst

- select the best_ni to send from, by going through all the local_nis that can reach any of the networks the peer is on

- consider local_ni health in the selection by selecting the local_ni with the best health value.

- If this is a resend do not select a local_ni that has already been used.

- select the best_peer_ni that can be reached by the best_ni selected in the previous step

- If this is a resend and the resend peer_ni is specified, then select this peer_ni if it is healthy, otherwise continue with the algorithm.

- If this is a resend and the resend peer_ni is not specified, do not consider a peer_ni that has already been used for sending as long as there are other peer_nis available for selection. Loop around and re-use peer_nis in round robin.

- peer_nis that are selected cannot be in HARD_ERROR state.

- send the message over that path.

B2C - src any, route to dst, mr dst

- find the router that can reach the dst_nid

- find the peer for that router. (The peer is MR)

- go to B1C

B1D - src any, local dst, nmr dst

- find peer_ni using dst_nid (non-MR, so this is the only peer_ni candidate)

- no issue if peer_ni is healthy

- try this peer_ni even if it is unhealthy if this is the 1st attempt to send this message

- fail if resending to an unhealthy peer_ni

- pick the preferred local_NI for this peer_ni if set

- If the preferred local_NI is not healthy, fail sending the message and let the upper layers deal with recovery.

- otherwise if preferred local_NI is not set, then pick a healthy local NI and make it the preferred NI for this peer_ni

- send over this path

B2D - src any, route dst, nmr dst

- find route to dst_nid

- find peer_ni of router

- no issue if peer_ni is healthy

- try this peer_ni even if it is unhealthy if this is the 1st attempt to send this message

- fail if resending to an unhealthy peer_ni

- pick the preferred local_NI for the dst_nid if set

- If the preferred local_NI is not healthy, fail sending the message and let the upper layers deal with recovery.

- otherwise if preferred local_NI is not set, then pick a healthy local NI and make it the preferred NI for this peer_ni

- send over this path

Resend Behavior

LNet will keep attempting to resend a message across different local/remote NIs as long as the interfaces are only in "soft" failure state. Interfaces are demerited when we fail to send over them due to a timeout. This is opposed to a hard failure which is reported by the underlying HW indicating that this interface can no longer be used for sending and receiving.

LNet will terminate resends of a message under any one of the following conditions:

- Peer timeout expires

- No interfaces available that can be used.

- A message is sent successfully.
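The three termination conditions can be expressed as a single check made before each further attempt. The sketch below is illustrative only: the struct, enum and function names are hypothetical, and time is modelled as a plain counter in seconds rather than kernel jiffies.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical snapshot of the state consulted before another resend. */
struct resend_state {
	unsigned long now;		/* current time, seconds */
	unsigned long deadline;		/* when the peer timeout expires */
	unsigned int usable_paths;	/* interface pairs still usable */
	bool sent_ok;			/* last attempt succeeded */
};

enum resend_verdict {
	RESEND_CONTINUE,	/* try again on another path */
	RESEND_DONE,		/* message was sent successfully */
	RESEND_TIMED_OUT,	/* peer timeout expired */
	RESEND_NO_PATH,		/* no interfaces left to try */
};

static enum resend_verdict resend_check(const struct resend_state *s)
{
	if (s->sent_ok)
		return RESEND_DONE;
	if (s->now >= s->deadline)
		return RESEND_TIMED_OUT;
	if (s->usable_paths == 0)
		return RESEND_NO_PATH;
	return RESEND_CONTINUE;
}
```

Checking success first means a message that completes exactly at the deadline is still reported as sent; that ordering is a choice made in this sketch, not something the design prescribes.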

For hard failures there needs to be a method to recover these interfaces. This can be done through a ping of the interface whether it is local or remote, since that ping will tell us if an interface is up or down.

The router checker infrastructure currently does this exact job for routers. This infrastructure can be expanded to also query the local or remote NIs which are down.

Selection of the local_ni or peer_ni will be dependent on the following criteria:

- Has the best health value

- skip interfaces in HARD_ERROR state

- closest NUMA (for local interfaces)

- most available credits

- Round Robin.

Interfaces which have soft failures will be demerited, so they will naturally be selected as a last option.
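The selection criteria listed above can be read as a lexicographic comparison. The following C sketch shows one way to implement that ordering; all type and function names are hypothetical. It assumes that a higher health value means a healthier interface and that round robin is implemented with a per-interface sequence counter, neither of which is mandated by the text above.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical per-interface selection state. */
struct ni_candidate {
	int health;			/* higher is healthier (assumption) */
	bool hard_error;		/* never selected while set */
	unsigned int numa_distance;	/* smaller is closer to the MD */
	int credits;			/* available send credits */
	unsigned int seq;		/* bumped on every selection */
};

/* True if a should be preferred over b. */
static bool candidate_is_better(const struct ni_candidate *a,
				const struct ni_candidate *b)
{
	if (a->health != b->health)
		return a->health > b->health;
	if (a->numa_distance != b->numa_distance)
		return a->numa_distance < b->numa_distance;
	if (a->credits != b->credits)
		return a->credits > b->credits;
	/* Everything equal: round robin, least recently selected wins. */
	return a->seq < b->seq;
}

/* Return the best usable candidate, or NULL if all are in hard error. */
static struct ni_candidate *select_best(struct ni_candidate *nis, size_t n)
{
	struct ni_candidate *best = NULL;
	size_t i;

	for (i = 0; i < n; i++) {
		if (nis[i].hard_error)
			continue;
		if (best == NULL || candidate_is_better(&nis[i], best))
			best = &nis[i];
	}
	if (best != NULL)
		best->seq++;
	return best;
}
```

Because health is compared first, a demerited interface is only chosen when no healthier one is available, which matches the "last option" behaviour described above.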

Work Items

- refactor lnet_select_pathway() as described above.

- Health Value Maintenance/Demerit system

- Selection based on Health Value and not resending over already used interfaces unless none are available.

- Handling the new events in IBLND and passing them to LNet

- Handling the new events in SOCKLND and passing them to LNet

- Adding LNet level transaction timeout (or reuse the peer timeout) and cancelling a resend on timeout

- Handling timeout case in ptlrpc

Patches

- refactor lnet_select_pathway() as described above

- Add health values to local_ni

- Modify selection to make use of local_ni health values.

- Add explicit constraint in the selection to fail a re-send if no local_ni is in optimal health

- Handle explicit port down/up events

- Handle local interface failure on send and update health value then resend

- Add health values to peer_ni

- Add explicit constraint in the selection to fail a re-send if no remote_ni is in optimal health

- Handle remote interface failure on send and update health value then resend

- Modify selection to make use of peer_ni health values.

- Handle LND tx timeout due to being stuck on the queues for too long.

- Handle LND tx timeout due to remote rejection

- Handle LND tx timeout due to no tx completion

- Add an Event timeout towards upper layers (PTLRPC) when a transaction has failed to complete, i.e. when an LNET_ACK_MSG or LNET_REPLY_MSG is not received.

- Handle the transaction timeout event in ptlrpc.

O2IBLND Detailed Discussion

Overview

There are two types of events to account for:

- Events on the RDMA device itself

- Events on the cm_id

Both events should be monitored because they provide information on the health of the device and connection respectively.

ib_register_event_handler() can be used to register a handler for events of the first type (events on the RDMA device).

A cm callback can be registered with the cm_id to handle RDMA_CM events.

There is a group of events which indicate a fatal error

RDMA Device Events

Below are the events that could occur on the RDMA device. Highlighted in BOLD RED are the events that should be handled for health purposes.

- IB_EVENT_CQ_ERR

- IB_EVENT_QP_FATAL

- IB_EVENT_QP_REQ_ERR

- IB_EVENT_QP_ACCESS_ERR

- IB_EVENT_COMM_EST

- IB_EVENT_SQ_DRAINED

- IB_EVENT_PATH_MIG

- IB_EVENT_PATH_MIG_ERR

- IB_EVENT_DEVICE_FATAL

- IB_EVENT_PORT_ACTIVE

- IB_EVENT_PORT_ERR

- IB_EVENT_LID_CHANGE

- IB_EVENT_PKEY_CHANGE

- IB_EVENT_SM_CHANGE

- IB_EVENT_SRQ_ERR

- IB_EVENT_SRQ_LIMIT_REACHED

- IB_EVENT_QP_LAST_WQE_REACHED

- IB_EVENT_CLIENT_REREGISTER

- IB_EVENT_GID_CHANGE

Communication Events

Below are the events that could occur on a connection. Highlighted in BOLD RED are the events that should be handled for health purposes.

RDMA_CM_EVENT_ADDR_RESOLVED: Address resolution (rdma_resolve_addr) completed successfully.

RDMA_CM_EVENT_ADDR_ERROR: Address resolution (rdma_resolve_addr) failed.

RDMA_CM_EVENT_ROUTE_RESOLVED: Route resolution (rdma_resolve_route) completed successfully.

RDMA_CM_EVENT_ROUTE_ERROR: Route resolution (rdma_resolve_route) failed.

RDMA_CM_EVENT_CONNECT_REQUEST: Generated on the passive side to notify the user of a new connection request.

RDMA_CM_EVENT_CONNECT_RESPONSE: Generated on the active side to notify the user of a successful response to a connection request. It is only generated on rdma_cm_id's that do not have a QP associated with them.

RDMA_CM_EVENT_CONNECT_ERROR: Indicates that an error has occurred trying to establish a connection. May be generated on the active or passive side of a connection.

RDMA_CM_EVENT_UNREACHABLE: Generated on the active side to notify the user that the remote server is not reachable or unable to respond to a connection request. If this event is generated in response to a UD QP resolution request over InfiniBand, the event status field will contain an errno, if negative, or the status result carried in the IB CM SIDR REP message.

RDMA_CM_EVENT_REJECTED: Indicates that a connection request or response was rejected by the remote end point. The event status field will contain the transport specific reject reason if available. Under InfiniBand, this is the reject reason carried in the IB CM REJ message.

RDMA_CM_EVENT_ESTABLISHED: Indicates that a connection has been established with the remote end point.

RDMA_CM_EVENT_DISCONNECTED: The connection has been disconnected.

RDMA_CM_EVENT_DEVICE_REMOVAL: The local RDMA device associated with the rdma_cm_id has been removed. Upon receiving this event, the user must destroy the related rdma_cm_id.

RDMA_CM_EVENT_MULTICAST_JOIN: The multicast join operation (rdma_join_multicast) completed successfully.

RDMA_CM_EVENT_MULTICAST_ERROR: An error either occurred joining a multicast group, or, if the group had already been joined, on an existing group. The specified multicast group is no longer accessible and should be rejoined, if desired.

RDMA_CM_EVENT_ADDR_CHANGE: The network device associated with this ID through address resolution changed its HW address, e.g. following a bonding failover. This event can serve as a hint for applications that want the links used for their RDMA sessions to align with the network stack.

RDMA_CM_EVENT_TIMEWAIT_EXIT: The QP associated with a connection has exited its timewait state and is now ready to be re-used. After a QP has been disconnected, it is maintained in a timewait state to allow any in flight packets to exit the network. After the timewait state has completed, the rdma_cm will report this event.

Health Handling

Handling Asynchronous Events

- A callback mechanism should be provided by LNet to the LND to report failure events

- Some translation matrix from LND specific errors to LNet specific errors should be created

- Each LND would create the mapping

- Whenever an event occurs that indicates a fatal error on the device, the LNet callback should be called.

- LNet should transition the local NI or remote NI appropriately and take measures to close the connections on that specific device.

Handling Errors on Sends

- If a request to send a message ends in an error, for example a connection error (as seen with the wrong device responding to ARP), then LNet should pick another local device to send from.

- There is a class of errors which indicates a problem in the local NI

- RDMA_CM_EVENT_DEVICE_REMOVAL - This device is no longer present. Should never be used.

- There is a class of errors which indicates a problem in the remote NI

- RDMA_CM_EVENT_ADDR_ERROR - The remote address is erroneous. Should not be used.

- RDMA_CM_EVENT_ADDR_RESOLVED with an error. The remote address cannot be resolved.

- RDMA_CM_EVENT_ROUTE_ERROR - No route to remote address. The peer_ni should not be used, but a retry can be done a bit later via a timer.

- RDMA_CM_EVENT_UNREACHABLE - Remote side is unreachable. Retry after a while.

- RDMA_CM_EVENT_CONNECT_ERROR - problem with connection. Retry after a while.

- RDMA_CM_EVENT_REJECTED - Remote side is rejecting connection. Retry after a while.

- RDMA_CM_EVENT_DISCONNECTED - Move outstanding operations to a different pair if available.
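The classification in the list above amounts to a lookup from connection event to the reaction LNet should take. The sketch below uses stand-in enums so that it compiles outside the kernel; a real LND would switch on the RDMA CM event type directly. The action names and the function name are hypothetical.

```c
#include <assert.h>

/* Stand-ins for the RDMA_CM_EVENT_* values discussed above. */
enum cm_event {
	CM_DEVICE_REMOVAL,
	CM_ADDR_ERROR,
	CM_ROUTE_ERROR,
	CM_UNREACHABLE,
	CM_CONNECT_ERROR,
	CM_REJECTED,
	CM_DISCONNECTED,
	CM_ESTABLISHED,
};

/* Hypothetical reactions, one per class of error in the list above. */
enum ni_action {
	ACT_NONE,			/* not a health-relevant event */
	ACT_LOCAL_NI_UNUSABLE,		/* local device is gone */
	ACT_PEER_NI_UNUSABLE,		/* remote address is wrong */
	ACT_PEER_NI_RETRY_LATER,	/* avoid for now, retry after a while */
	ACT_MOVE_TO_OTHER_PAIR,		/* move outstanding operations */
};

static enum ni_action classify_cm_event(enum cm_event ev)
{
	switch (ev) {
	case CM_DEVICE_REMOVAL:
		return ACT_LOCAL_NI_UNUSABLE;
	case CM_ADDR_ERROR:
		return ACT_PEER_NI_UNUSABLE;
	case CM_ROUTE_ERROR:
	case CM_UNREACHABLE:
	case CM_CONNECT_ERROR:
	case CM_REJECTED:
		return ACT_PEER_NI_RETRY_LATER;
	case CM_DISCONNECTED:
		return ACT_MOVE_TO_OTHER_PAIR;
	default:
		return ACT_NONE;
	}
}
```

Keeping the mapping in one function per LND matches the idea that each LND owns its translation from LND-specific errors to LNet-level ones.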

Handling Timeout

This is probably the trickiest situation. Timeout could occur because of network congestion, or because the remote side is too busy, or because it's dead, or hung, etc.

Timeouts are being kept in the LND (o2iblnd) on the transmits. Every transmit which is queued is assigned a deadline. If it expires then the connection on which this transmit is queued, is closed.

peer_timeout can be set in both routed and non-routed scenarios, and provides information on the peer.

Timeouts are also being kept at ptlrpc. These are rpc timeouts.

Refer to section 32.5 in the manual for a description of how RPC timeouts work.

Also refer to section 27.3.7 for LNet Peer Health information.

Given the presence of various timeouts, adding yet another timeout on the message will further complicate the configuration and possibly cause further hard-to-debug issues.

One option to consider is to use the peer_timeout feature to recognize when peer_nis are down, and update the peer_ni health information via this mechanism, letting the LND and RPC timeouts take care of further resends.

High Level Design

Callback Mechanism

[Olaf: bear in mind that currently the LND already reports status to LNet through lnet_finalize()]

- Add an LNet API:

- lnet_report_lnd_error(lnet_error_type type, int errno, struct lnet_ni *ni, struct lnet_peer_ni *lpni)

- LND will map its internal errors to lnet_error_type enum.

enum lnet_error_type {

LNET_LOCAL_NI_DOWN, /* don't use this NI until you get an UP */

LNET_LOCAL_NI_UP, /* start using this NI */

LNET_LOCAL_NI_SEND_TIMEOUT, /* demerit this NI so it's not selected immediately, provided there are other healthy interfaces */

LNET_PEER_NI_ADDR_ERROR, /* The address for the peer_ni is wrong. Don't use this peer_NI */

LNET_PEER_NI_UNREACHABLE, /* temporarily don't use the peer NI */

LNET_PEER_NI_CONNECT_ERROR, /* temporarily don't use the peer NI */

LNET_PEER_NI_CONNECTION_REJECTED /* temporarily don't use the peer NI */

};

- Although some of the actions LNet will take is the same for different errors, it's still a good idea to keep them separate for statistics and logging.

- on LNET_LOCAL_NI_DOWN set the ni_state to STATE_FAILED. In the selection algorithm this NI will not be picked.

- on LNET_LOCAL_NI_UP set the ni_state to STATE_ACTIVE. In the selection algorithm this NI will be selected.

- Add a state in the peer_ni. This will indicate if it is usable or not.

- on LNET_PEER_NI_ADDR_ERROR set the peer_ni state to FAILED. This peer_ni will not be selected in the selection algorithm.

- Add a health value (int). 0 means it's healthy and available for selection.

- on any LNET_PEER_NI_[UNREACHABLE | CONNECT_ERROR | CONNECTION_REJECTED] decrement this value.

- That value indicates how long before we use it again.

- A time before use in jiffies is stored. The next time we examine this peer_NI for selection, we take a look at that time. If it has been passed we select it, but we do not increment this value. The value is set to 0 only if there is a successful send to this peer_ni.

- The net effect is that if we have a bad peer_ni, the health value will keep getting decremented, which will mean it'll take progressively longer to reuse it.

- This algorithm is in effect only if there are multiple interfaces, and some of them are healthy. If none of them are healthy (i.e. the health value is negative), then select the least unhealthy peer_ni (the one with the greatest health value).

- The same algorithm can be used for local NI selection
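The health value and time-before-use bookkeeping described above can be sketched as follows. This is an illustration under stated assumptions, not the LNet implementation: the names are hypothetical, time is a plain tick counter rather than jiffies (so wrap-around is ignored), and the back-off of 100 ticks per demerit is an arbitrary choice made for the example.

```c
#include <assert.h>
#include <stdbool.h>

#define BACKOFF_TICKS_PER_DEMERIT 100UL	/* arbitrary, for illustration */

/* Hypothetical health record kept per peer_ni (or local NI). */
struct ni_health {
	int health;		/* 0 = healthy, negative = demerited */
	unsigned long next_use;	/* earliest tick at which to try again */
};

/* Failure: decrement the value, so each failure waits longer. */
static void health_on_failure(struct ni_health *h, unsigned long now)
{
	h->health--;
	h->next_use = now +
		(unsigned long)(-h->health) * BACKOFF_TICKS_PER_DEMERIT;
}

/* Only a successful send resets the value to 0. */
static void health_on_success(struct ni_health *h)
{
	h->health = 0;
	h->next_use = 0;
}

/* Selectable if healthy, or if its back-off time has passed. */
static bool health_usable(const struct ni_health *h, unsigned long now)
{
	return h->health == 0 || now >= h->next_use;
}
```

Note that health_usable() never raises the health value: an interface whose back-off has elapsed is tried again, but it stays demerited until a send over it succeeds.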

Timeout Handling

LND TX Timeout

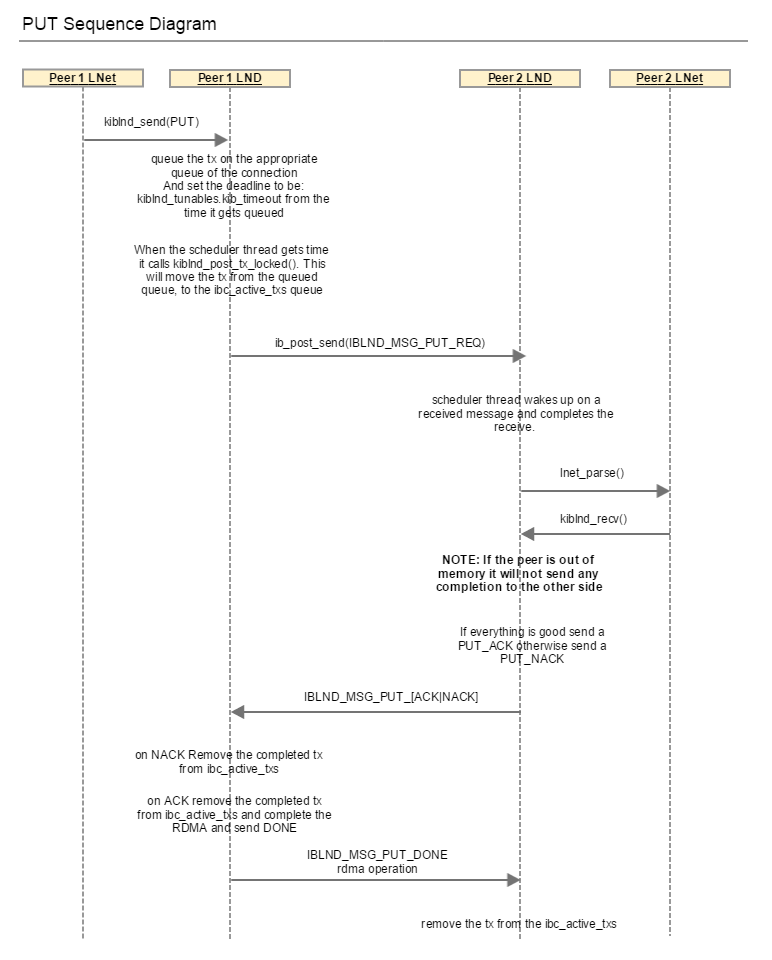

PUT

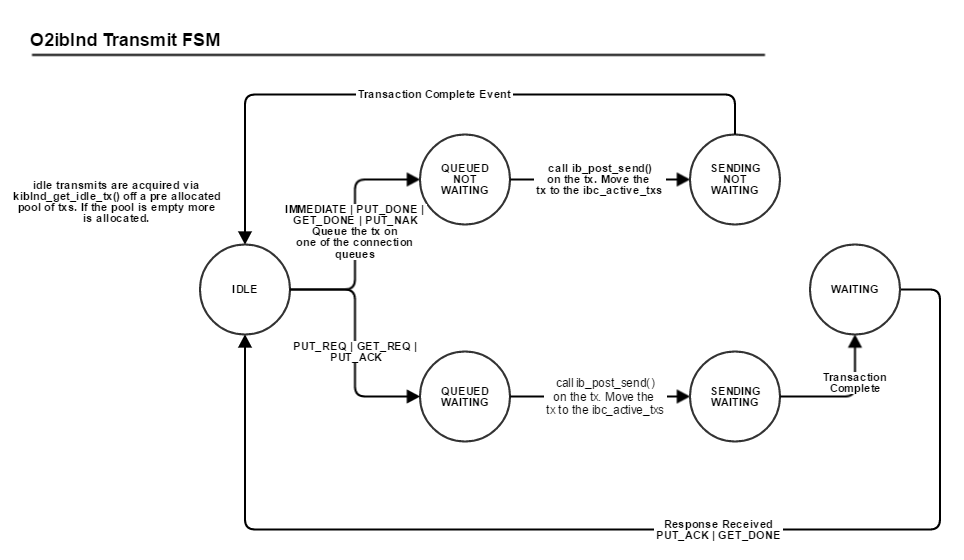

As shown in the above diagram, whenever a tx is queued to be sent, or is posted but has not yet received confirmation, the tx_deadline is still active. The scheduler thread checks the active connections for any transmits which have passed their deadline, and then it closes those connections and notifies LNet via lnet_notify().

The tx timeout is cancelled in the call to kiblnd_tx_done(). This function checks 3 flags: tx_sending, tx_waiting and tx_queued. If all of them are 0 then the tx is closed as completed. The key flag to note is tx_waiting. That flag indicates that the tx is waiting for a reply. It is set to 1 in kiblnd_send() when sending the PUT_REQ or GET_REQ. It is also set when sending the PUT_ACK. All of these messages expect a reply back. When the expected reply is received, tx_waiting is set to 0 and kiblnd_tx_done() is called, which eventually cancels the tx_timeout by removing the tx from the queues being checked for the timeout.
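The role of the three flags can be illustrated with a small model. This is a simplified sketch and not the o2iblnd code: the struct below is a stand-in that mirrors only the three flag names mentioned above, and tx_can_complete is a hypothetical helper.

```c
#include <assert.h>
#include <stdbool.h>

/* Simplified stand-in for the flags kept on an o2iblnd transmit. */
struct tx_flags {
	bool tx_queued;		/* still on an outgoing queue */
	bool tx_sending;	/* posted, send completion not yet seen */
	bool tx_waiting;	/* waiting for a reply from the peer */
};

/* The tx may be finished (and its deadline dropped) only when idle. */
static bool tx_can_complete(const struct tx_flags *tx)
{
	return !tx->tx_queued && !tx->tx_sending && !tx->tx_waiting;
}
```

For a PUT_REQ, the tx starts out queued and waiting, becomes sending once posted, and only becomes completable after both the send completion and the peer's reply have arrived. Until then the deadline stays armed.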

The notification in the LNet layer that the connection has been closed can be used by MR to attempt to resend the message on a different peer_ni.

<TBD: I don't think that LND attempts to automatically reconnect to the peer if the connection gets torn down because of a tx_timeout.>

TX timeout is exactly what we need to determine if the message has been transmitted successfully to the remote side. If it has not been transmitted successfully we can attempt to resend it on different peer_nis until we're either successful or we've exhausted all of the peer_nis.

The reason for the TX timeout is also important:

- TX timeout can be triggered because the TX remains on one of the outgoing queues for too long. This would indicate that there is something wrong with the local NI. It's either too congested or otherwise problematic. This should result in us trying to resend using a different local NI but possibly to the same peer_ni.

- TX timeout can be triggered because the TX is posted via ib_post_send() but it has not completed. In this case we can safely conclude that the peer_ni is either congested or otherwise down. This should result in us trying to resend to a different peer_ni, but potentially using the same local NI.
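The two timeout causes lead to different constraints on the next selection round. A minimal sketch of that decision follows; the enum, struct and function names are hypothetical and only encode the two bullets above.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical reason attached to a tx timeout by the LND. */
enum tx_timeout_reason {
	TX_TIMEOUT_QUEUED,	/* tx never left the outgoing queues */
	TX_TIMEOUT_POSTED,	/* tx was posted but never completed */
};

/* Which interface of the failed path the resend should avoid. */
struct resend_hint {
	bool avoid_local_ni;
	bool avoid_peer_ni;
};

static struct resend_hint resend_hint_for(enum tx_timeout_reason reason)
{
	struct resend_hint hint = { false, false };

	if (reason == TX_TIMEOUT_QUEUED)
		hint.avoid_local_ni = true;	/* local NI is suspect */
	else
		hint.avoid_peer_ni = true;	/* peer_ni is suspect */
	return hint;
}
```

The hint only rules out the suspect side; the other interface of the failed path remains eligible, as the bullets above describe.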

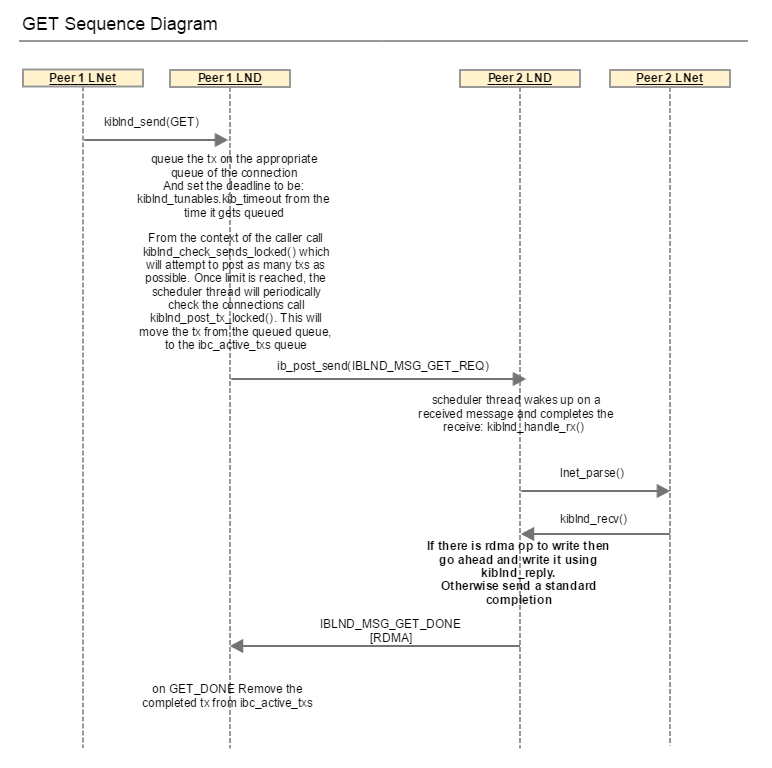

GET

After the completion of an o2iblnd tx ib_post_send(), a completion event is added to the completion queue. This triggers kiblnd_complete() to be called. If this is an IBLND_WID_TX then kiblnd_tx_complete() is called, which calls kiblnd_tx_done() if the tx is not sending, waiting or queued. In this case the tx_timeout is cancelled.

In summary, the tx_timeout serves to ensure that messages which do not require an explicit response from the peer are completed on the tx event added by M|OFED to the completion queue. It also serves to ensure that any messages which require an explicit reply receive that reply within the tx_timeout.

PUT and GET in Routed Configuration

When a node receives a PUT request, the O2IBLND calls lnet_parse() to deal with it. lnet_parse() calls lnet_parse_put(), which matches the MD and initiates a receive. This ends up calling into the LND, kiblnd_recv(), which sends an IBLND_MSG_PUT_[ACK|NAK]. This allows the sending peer LND to know that the PUT has been received, and let go of its TX, as shown below. On receipt of the ACK|NAK, the peer sends an IBLND_MSG_PUT_DONE, and initiates the RDMA operation. Once the tx completes, kiblnd_tx_done() is called, which will then call lnet_finalize(). For the PUT, LNet will end up sending an LNET_MSG_ACK, if it needs to (look at lnet_parse_put() for the condition on which LNET_MSG_ACK is sent).

In the case of a GET, on receipt of IBLND_MSG_GET_REQ, the call chain is lnet_parse() -> lnet_parse_get() -> kiblnd_recv(). If there is data to be sent back, then the LND sends an RDMA operation with IBLND_MSG_GET_DONE, or just the DONE.

The point I'm trying to illustrate here is that there are two levels of messages. There are the LND messages which confirm that a single LNET message has been received by the peer. And then there are the LNet level messages, such as LNET_MSG_ACK and LNET_MSG_REPLY. These two in particular are in response to the LNET_MSG_PUT and LNET_MSG_GET respectively. At the LND level IBLND_MSG_IMMEDIATE is used.

In a routed configuration, the entire LND handshake between the peer and the router is completed. However the LNET level messages like LNET_MSG_ACK and LNET_MSG_REPLY are sent by the final destination, and not by the router. The router simply forwards on the message it receives.

The question that the design needs to answer is this: Should LNet be concerned with resending messages if LNET_MSG_ACK or LNET_MSG_REPLY are not received for LNET_MSG_PUT and LNET_MSG_GET respectively?

At this point (pending further discussion) it is my opinion that it should not. I argue that the decision to get LNET to send the LNET_MSG_ACK or LNET_MSG_REPLY implicitly is actually a poor one. These messages are in direct response to direct requests by upper layers like RPC. What should've been happening is that when LNET receives an LNET_MSG_[PUT|GET], an event should be generated to the requesting layer, and the requesting layer should be doing another call to LNet to send the LNET_MSG_[ACK|REPLY]. Maybe it was done that way in order not to hold on to resources longer than necessary, but semantically these messages should belong to the upper layer. Furthermore, the events generated by these messages are used by the upper layer to determine when to do the resends of the PUT/GET. For these reasons I believe that it is a sound decision to task LNet with attempting to send an LNet message over a different local_ni/peer_ni only if this message is not received by the remote end. This situation is caught by the tx_timeout.

O2IBLND TX Lifecycle

In order to understand fully how the LND transmit timeout can be used for resends, we need to have an understanding of the transmit life cycle shown above.

This shows that the timeout depends on the type of request being sent. If the request expects a response back then the tx_timeout covers the entire transaction lifetime. Otherwise it covers up until the transmit complete event is queued on the completion queue.

Currently, if the transmit timeout is triggered the connection is closed to ensure that all RDMA operations have ceased. LNet is notified of the error and, if the modprobe parameter auto_down is set (which it is by default), the peer is marked down. In lnet_select_pathway() lnet_post_send_locked() is called. One of the checks it does is to make sure that the peer we're trying to send to is alive. If not, the message is dropped and -EHOSTUNREACH is returned up the call chain.

In lnet_select_pathway() if lnet_post_send_locked() fails, then we ought to mark the health of the peer and attempt to select a different peer_ni to send to.

NOTE, currently we don't know why the peer_ni is marked down. As mentioned above the tx_timeout could be triggered for several reasons. Some reasons indicate a problem on the peer side, i.e. not receiving a response or a transmit complete. Other reasons could indicate local problems, for example the tx never leaves the queued state. Depending on the reason for the tx_timeout LNet should react differently in its next round of interface selection.

Peer timeout and recovery model

- On transmit timeout kiblnd notifies LNet that the peer has closed due to an error. This goes through the lnet_notify path.

- The peer aliveness at the LNet layer is set to 0 (dead), and the last alive

- In IBLND whenever a message is received successfully, transmitted successfully or a connection is completed (whether it is successful or has been rejected) then the last alive time of the peer is set.

- At the LNet layer for a non router node, lnet_peer_aliveness_enabled() will always return 0:

#define lnet_peer_aliveness_enabled(lp) (the_lnet.ln_routing != 0 && ((lp)->lpni_net) && (lp)->lpni_net->net_tunables.lct_peer_time_out > 0)

In effect, the aliveness of the peer is not considered at all if the node is not a router.

- This can remain the same since the health of the peer will be considered in lnet_select_pathway() before this is considered.

- In fact if the logic for the health of the peer is done in lnet_select_pathway(), then the logic in lnet_post_send_locked() can be removed. A peer will always be as healthy as possible by the time the flow hits lnet_post_send_locked()

- If the node is not a router, then a peer will always be tried regardless of its health. If it is a router then once every second the peer will be queried to see if it's alive or not.

- TBD: In o2iblnd kiblnd_query looks up the peer and then returns the last_alive of the peer. However, there is code "if (peer_ni == NULL) kiblnd_launch_tx(ni, NULL, nid)". This code will attempt creating and connecting to the peer, which should allow us to discover if the peer is alive. However, as far as I know peer_ni is never removed from the hash. So if it's already an existing peer which died, then the call to kiblnd_launch_tx() will never be made, and we'll never discover if the peer came back to life.

- In socklnd, socknal_query() works differently. It actually attempts to connect to the peer again, within a timeout. This leads the router to discover that the peer is healthy and start using it again.

Health Revisited

There are different scenarios to consider with Health:

- Asynchronous events which indicate that the card is down

- Immediate failures when sending

- Failures reported by the LND

- Failures that occur because the peer is down, although this class of failures could be moved into the selection algorithm, i.e. do not pick peer_nis which are not alive.

- TX timeout cases.

- Currently connection is closed and peer is marked down.

- This behavior should be enhanced to attempt to resend on a different local NI/peer NI, and mark the health of the NI

TX Timeouts in the presence of LNet Routers

Communication with a router adheres to the above details. Once the current hop is sure that the message has made it to the next hop, LNet shouldn't worry about resends. Resends are only to ensure that the message LNet is tasked to send makes it to the next hop. The upper layer RPC protocol makes sure that RPC messages are retried if necessary.

Each hop's LNet will do a best effort in getting the message to the following hop. Unfortunately, there is no feedback mechanism from a router to the originator to inform the originator that a message has failed to send, but I believe this is unnecessary and will probably increase the complexity of the code and the system in general. Rule of thumb should be that each hop only worries about the immediate next hop.

SOCKLND Detailed Discussion

TBD

Old

Implementation Specifics

Events that are triggered asynchronously, without initiating a message, such as port down, port up, rdma device removed, shall be handled via a new LNet/LND API that shall be added.

In the other cases, lnet_ni_send() calls into the LND via the lnd_send() callback provided. If the return code is failure lnet_finalize() is called to finalize the message.

lnet_finalize() takes the return code as an input parameter. The above behavior should be implemented in lnet_finalize() since this is the main entry into the LNet module via the LNDs as well.

lnet_finalize() detaches the MD in preparation of completing the message. Once the MD is detached it can be re-used. Therefore, if we are to re-send the message then the MD shouldn't be detached at this point.

lnet_complete_msg_locked() should be modified to manage the local interface health, and decide whether the message should be resent or not. If the message can not be resent due to no available local interfaces then the MD can be detached and the message can be freed.

Currently lnet_select_pathway() iterates through all the local interfaces on a particular peer identified by the NID to send to. In this case we would want to restrict the resend to go to the same peer_ni, but on a different local interface.

This approach lends itself to breaking out the selection of the local interface from lnet_select_pathway(), leading to the following logic:

lnet_ni lnet_get_best_ni(local_net, cur_ni, md_cpt)

{

local_net = get_local_net(peer_net)

for each ni in local_net {

health_value = lnet_local_ni_health(ni)

/* select the best health value */

if (health_value < best_health_value)

continue

distance = get_distance(md_cpt, dev_cpt)

/* select the shortest distance to the MD */

if (distance < lnet_numa_range)

distance = lnet_numa_range

if (distance > shortest_distance)

continue

else if (distance < shortest_distance)

shortest_distance = distance

/* select based on the most available credits */

else if (ni_credits < best_credits)

continue

/* if all is equal select based on round robin */

else if (ni_credits == best_credits)

if (best_ni->ni_seq <= ni->ni_seq)

continue

/* ni is the best candidate seen so far: remember it */

best_ni = ni

best_health_value = health_value

best_credits = ni_credits

}

return best_ni

}

/*

* lnet_select_pathway() will be modified to add a peer_nid parameter.

* This parameter indicates that the peer_ni is predetermined, and is

* identified by the NID provided. The peer_nid parameter is the

* next-hop NID, which can be the final destination or the next-hop

* router. If that peer_NID is not healthy then another peer_NID is

* selected as per the current algorithm. This will force the

* algorithm to prefer the peer_ni which was selected in the initial

* message sending. The peer_ni NID is stored in the message. This

* new parameter extends the concept of the src_nid, which is provided

* to lnet_select_pathway() to inform it that the local NI is

* predetermined.

*/

/* on resend */

enum lnet_error_type {

LNET_LOCAL_NI_DOWN, /* don't use this NI until you get an UP */

LNET_LOCAL_NI_UP, /* start using this NI */

LNET_LOCAL_NI_SEND_TIMEOUT, /* demerit this NI so it's not selected immediately, provided there are other healthy interfaces */

LNET_PEER_NI_NO_LISTENER, /* there is no remote listener. demerit the peer_ni and try another NI */

LNET_PEER_NI_ADDR_ERROR, /* The address for the peer_ni is wrong. Don't use this peer_NI */

LNET_PEER_NI_UNREACHABLE, /* temporarily don't use the peer NI */

LNET_PEER_NI_CONNECT_ERROR, /* temporarily don't use the peer NI */

LNET_PEER_NI_CONNECTION_REJECTED /* temporarily don't use the peer NI */

};

static int lnet_handle_send_failure_locked(msg, local_nid, status)

{

switch (status)

/*

* LNET_LOCAL_NI_DOWN can be received without a message being sent.

* In this case msg == NULL and it is sufficient to update the health

* of the local NI

*/

case LNET_LOCAL_NI_DOWN:

LASSERT(!msg);

/* msg is NULL here, so use the local_nid argument */

local_ni = lnet_get_local_ni(local_nid)

if (!local_ni)

return

/* flag local NI down */

lnet_set_local_ni_health(local_ni, DOWN)

break;

case LNET_LOCAL_NI_UP:

LASSERT(!msg);

/* msg is NULL here, so use the local_nid argument */

local_ni = lnet_get_local_ni(local_nid)

if (!local_ni)

return

/* flag local NI up */

lnet_set_local_ni_health(local_ni, UP)

/* This NI will be a candidate for selection in the next message send */

break;

...

}

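static int lnet_complete_msg_locked(msg, cpt)
{
    status = msg->msg_ev.status;
    if (status != 0) {
        rc = lnet_handle_send_failure_locked(msg, msg->local_nid, status);
        /* rc == 0: the failure was handled, so skip the normal completion path */
        if (rc == 0)
            return;
    }
    /* continue as currently done */
}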
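
The local NI handling described above can be illustrated with a small, self-contained C sketch. It is not LNet code: struct sketch_ni, enum sketch_ni_event, sketch_ni_event_apply() and sketch_ni_select() are hypothetical names invented for illustration, and a simple demerit counter is only one assumed way to "demerit" an NI after a send timeout.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical, simplified stand-in for a local NI; not the real LNet struct. */
struct sketch_ni {
    bool up;        /* false after a DOWN event until an UP event arrives */
    int  demerits;  /* incremented on send timeouts; lower is preferred */
};

/* Hypothetical event names mirroring the LNET_LOCAL_NI_* error types. */
enum sketch_ni_event { SK_NI_DOWN, SK_NI_UP, SK_NI_SEND_TIMEOUT };

/* Apply one health event to one local NI. */
static void sketch_ni_event_apply(struct sketch_ni *ni, enum sketch_ni_event ev)
{
    assert(ni != NULL);
    switch (ev) {
    case SK_NI_DOWN:
        ni->up = false;
        break;
    case SK_NI_UP:
        ni->up = true;
        break;
    case SK_NI_SEND_TIMEOUT:
        ni->demerits++;
        break;
    }
}

/*
 * Return the index of the usable NI with the fewest demerits, or -1 if
 * every NI is down. A demerited NI is still chosen when it is the only
 * one that is up.
 */
static int sketch_ni_select(const struct sketch_ni *nis, size_t n)
{
    int best = -1;

    for (size_t i = 0; i < n; i++) {
        if (!nis[i].up)
            continue;
        if (best < 0 || nis[i].demerits < nis[best].demerits)
            best = (int)i;
    }
    return best;
}
```

In this sketch a send timeout only lowers an NI's priority, while a DOWN event removes it from selection entirely until an UP event is seen, which matches the comments on the error types.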

Remote Interface Failure

A remote interface can be considered problematic under multiple scenarios:

- Address is wrong

- Route cannot be determined

- Connection cannot be established

- Connection was rejected due to incompatible parameters

Desired Behavior

When a remote interface fails, the following actions take place:

- The remote interface health is updated

- Failure statistics are incremented

- A resend is issued on a different remote interface if there is one available.

- If no other remote interface is present then the send fails.

There are several ways a remote interface can recover:

- The remote interface is retried as a destination because there is no alternative available, and no error results.

- The remote interface is periodically probed by a helper thread. An interesting wrinkle is that there is no reason to probe the remote interface unless there is traffic flowing to the peer through other paths. (LNet doesn't care about the state of a remote node that the local node isn't talking to anyway.)
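
The probing rule in the last item can be sketched as a small predicate. This is an illustration only: struct sketch_peer and sketch_should_probe() are hypothetical, and "traffic is flowing to the peer" is assumed here to mean that a message was sent to the peer within the last idle_limit seconds.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical, simplified peer state; not the real LNet peer structures. */
struct sketch_peer {
    const bool *ni_healthy;  /* one health flag per remote interface */
    size_t      ni_count;
    long        last_send;   /* time (seconds) of the last send to this peer */
};

/*
 * Decide whether the helper thread should probe remote interface idx.
 * Assumption: the peer counts as "in use" if a send happened within
 * idle_limit seconds of now.
 */
static bool sketch_should_probe(const struct sketch_peer *peer, size_t idx,
                                long now, long idle_limit)
{
    assert(peer != NULL && idx < peer->ni_count);
    if (peer->ni_healthy[idx])
        return false;  /* interface is healthy, nothing to recover */
    return now - peer->last_send <= idle_limit;
}
```

An unhealthy interface of an idle peer is left alone, so the helper thread spends no effort on nodes the local node is not talking to.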

Implementation Specifics

In all these cases a different peer_ni should be tried if one exists. lnet_select_pathway() already takes src_nid as a parameter. When resending due to one of these failures, src_nid will be set to the src_nid stored in the message being resent, so the local NI stays fixed and only the peer_ni changes.

static int lnet_handle_send_failure_locked(msg, local_nid, status)
{
    switch (status) {
    ...
    case LNET_PEER_NI_ADDR_ERROR:
        lpni->stats.stat_addr_err++;
        goto peer_ni_resend;
    case LNET_PEER_NI_UNREACHABLE:
        lpni->stats.stat_unreachable++;
        goto peer_ni_resend;
    case LNET_PEER_NI_CONNECT_ERROR:
        lpni->stats.stat_connect_err++;
        goto peer_ni_resend;
    case LNET_PEER_NI_CONNECTION_REJECTED:
        lpni->stats.stat_connect_rej++;
        goto peer_ni_resend;
    default:
        /* unexpected failure. failing message */
        return;
    }

peer_ni_resend:
    /* src_nid is the src_nid stored in the message being resent */
    lnet_send(msg, src_nid);
}
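
The choice of a different peer_ni on resend can be sketched in isolation as follows. This is a minimal illustration under stated assumptions, not the LNet selection algorithm: struct sketch_lpni and sketch_resend_target() are hypothetical, the per-error statistics are collapsed into a single counter, and the first healthy alternative is taken rather than applying any weighting.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-in for a peer NI; not the real LNet peer NI struct. */
struct sketch_lpni {
    bool         healthy;
    unsigned int failures;  /* failure statistic, all error types combined */
};

/*
 * Record a failure on nis[failed], then return the index of another
 * healthy peer NI to resend on, or -1 if there is none and the send
 * must fail.
 */
static int sketch_resend_target(struct sketch_lpni *nis, size_t n, size_t failed)
{
    assert(nis != NULL && failed < n);
    nis[failed].healthy = false;
    nis[failed].failures++;
    for (size_t i = 0; i < n; i++) {
        if (i != failed && nis[i].healthy)
            return (int)i;
    }
    return -1;
}
```

A caller would resend on the returned index while keeping the local NI unchanged, and would fail the message when -1 is returned.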