Introduction

It is sometimes desirable to fine-tune the selection of local and remote NIs used for communication. For example, if a node currently has two networks, an OPA network and an MLX network, both will be used. In particular, when the traffic volume is low the credits criteria will be equivalent between the nodes, and both networks will be used in round robin. However, the user might want to use one network for all traffic and keep the other network free unless the first network goes down.

User Defined Selection Policies (UDSP) will allow this type of control.

UDSPs are configured from lnetctl via either command line or YAML config files and then passed to the kernel. Policies are applied to all local networks and remote peers then stored in the kernel. During the selection process the policies are examined as part of the selection algorithm.

UDSP Rule Types

Outlined below are the UDSP rule types:

- Network rules

- NID rules

- NID Pair rules

- NET Pair rules

- Router rules

Network Rules

These rules define the relative priority of the networks against each other. 0 is the highest priority. Networks with higher priority are preferred by the selection algorithm, unless the network has no healthy interfaces. If an interface on another network can be used and it is healthier than any interface available on the current network, then that interface will be used. Health always trumps all other criteria.

Syntax

lnetctl policy add --src *@<net type> --<action-type> <context dependent value>

ex: lnetctl policy add --src *@o2ib1 --priority 0

NID Rules

These rules define the relative priority of individual NIDs. 0 is the highest priority. Once a network is selected, the NID with the highest priority is preferred. Note that NID priority ranks below health. For example, suppose there are two NIDs, NID-A and NID-B. If NID-A has a higher priority but a lower health value, NID-B will still be selected. In that sense the policies act as a hint to guide the selection algorithm.

Syntax

lnetctl policy add --src <ip>@<net type> --<action-type> <context dependent value>

ex: lnetctl policy add --src 10.10.10.2@o2ib1 --priority 1
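The ordering between health and priority described for NID rules can be sketched as a comparison function. This is a minimal illustration and not the LNet implementation: struct nid_candidate, its fields and nid_is_better() are hypothetical names, and the sketch assumes that a larger health value means a healthier NID.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical, simplified view of a NID; for illustration only. */
struct nid_candidate {
	uint32_t priority;	/* 0 is the highest priority */
	int healthv;		/* assumption: larger means healthier */
};

/*
 * Return true if cand should replace best.
 * Health is compared first; priority only breaks ties between
 * equally healthy NIDs.
 */
static bool nid_is_better(const struct nid_candidate *cand,
			  const struct nid_candidate *best)
{
	if (cand->healthv != best->healthv)
		return cand->healthv > best->healthv;
	return cand->priority < best->priority;
}
```

With NID-A at priority 0 and a lower health value, and NID-B at priority 1 and a higher health value, nid_is_better(&nid_b, &nid_a) returns true, which matches the NID-A/NID-B example above.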

NID Pair Rules

These rules define the relative priority of paths. 0 is the highest priority. Once a destination NID is selected the source NID with the highest priority is selected to send from.

Syntax

lnetctl policy add --src <ip>@<net type> --dst <ip>@<net type> --<action-type> <context dependent value>

ex: lnetctl policy add --src 10.10.10.2@o2ib1 --dst 10.10.10.4@o2ib1 --priority 1

Net Pair Rules

Net Pair Rules are a generalization of the NID Pair Rules. They assign a priority to all NIDs on two different networks. This can be used to control traffic heading to a remote network.

Syntax

lnetctl policy add --src *@<net type> --dst *@<net type> --<action-type> <context dependent value>

ex: lnetctl policy add --src *@o2ib1 --dst *@o2ib2 --priority 0

Router Rules

Router Rules define which set of routers to use. When defining a network, some paths may be more optimal than others. To gain more control over the path traffic takes, admins configure interfaces on different networks and split up the router pools among the networks. However, this results in a complex configuration which is hard to maintain and error-prone. It is much more desirable to configure all interfaces on the same network, and then define which routers to use when sending to a remote peer. Router Rules provide this functionality.

Syntax

lnetctl policy add --dst <ip>@<net type> --rte <ip>@<net type> --<action-type> <context dependent value>

ex: lnetctl policy add --dst 10.10.10.2@o2ib3 --rte 10.10.10.[5-8]@o2ib --priority 0

User Interface

Command Line Syntax

As illustrated in the examples above, all policies can be specified using the following syntax:

lnetctl policy <add | del | show>

--src: ip2nets syntax specifying the local NID to match

--dst: ip2nets syntax specifying the remote NID to match

--rte: ip2nets syntax specifying the router NID to match

--priority: Priority to apply to rule matches

--idx: Index of where to insert the rule. By default it appends to the end of the rule list

At the time of this writing only the "priority" action will be implemented. However, it is feasible to implement different actions in the future, to be taken when a rule matches. For example, we can implement a "redirect" action, which redirects traffic to another destination. Another example is a "lawful intercept" or "mirror" action, which mirrors messages to a different destination. This might be useful for keeping a standby server updated with all information going to the primary server. A lawful intercept action allows personnel authorized by a Law Enforcement Agency (LEA) to intercept file operations from targeted clients and send the file operations to an LI Mediation Device.

YAML Syntax

udsp:
    - src: <ip>@<net type>
      dst: <ip>@<net type>
      rte: <ip>@<net type>
      idx: <unsigned int>
      action:
          - priority: <unsigned int>

Overview of Operations

There are three main operations which can be carried out on UDSPs, either from the command line or from YAML configuration: add, delete, show.

Add

The UI allows adding a new rule. With the optional idx parameter, the admin can specify where in the rule chain the new rule should be added. By default the rule is appended to the list. Any other value results in the rule being inserted at that position.

When a new UDSP is added the entire UDSP set is re-evaluated. This means all Nets, NIs and peer NIs in the systems are traversed and the rules re-applied. This is an expensive operation, but given that UDSP management should be a rare operation, it shouldn't be a problem.
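The idx behaviour of the add operation can be sketched with a simple singly linked list. This is an illustrative sketch under assumptions: struct udsp_rule, udsp_insert() and the UDSP_IDX_APPEND sentinel are hypothetical names, and an idx beyond the end of the list is assumed to append. The re-evaluation of the whole UDSP set that follows an add is not shown.

```c
#include <stddef.h>

#define UDSP_IDX_APPEND (-1)	/* hypothetical sentinel: no idx was given */

/* Hypothetical, minimal rule node; for illustration only. */
struct udsp_rule {
	unsigned int priority;
	struct udsp_rule *next;
};

/*
 * Insert rule at 0-based position idx in the list headed by *head.
 * If idx is UDSP_IDX_APPEND, or is past the end of the list,
 * the rule is appended.
 */
static void udsp_insert(struct udsp_rule **head, struct udsp_rule *rule,
			int idx)
{
	struct udsp_rule **pos = head;
	int i = 0;

	/* walk to the insertion point, or to the end when appending */
	while (*pos != NULL && (idx == UDSP_IDX_APPEND || i < idx)) {
		pos = &(*pos)->next;
		i++;
	}
	rule->next = *pos;
	*pos = rule;
}
```

Appending rules A and B and then inserting rule C with idx 1 yields the order A, C, B.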

Delete

The UI allows deleting an existing UDSP using its index. The index can be shown using the show command. When a UDSP is deleted the entire UDSP set is re-evaluated. The Nets, NIs and peer NIs are traversed and the rules re-applied.

Show

The UI allows showing existing UDSPs. The format of the YAML output is as follows:

udsp:
    - idx: <unsigned int>
      src: <ip>@<net type>
      dst: <ip>@<net type>
      rte: <ip>@<net type>
      action:
          - priority: <unsigned int>

Design

All policies are stored in kernel space. All logic to add, delete and match policies will be implemented in kernel space. This complicates the kernel space processing. Arguably, policy maintenance logic is not core to LNet functionality. What is core is the ability to select source and destination networks and NIDs in accordance with user definitions.

Design Principles

Rule Storage

Rules shall be defined in user space and passed to the LNet module. LNet shall store these rules on a policy list. Once policies are added to LNet they will be applied to existing networks, NIDs and routers. The advantage of this approach is that rules are not strictly tied to the internal constructs (i.e. networks, NIDs or routers). They can be applied whenever the internal constructs are created, and if the internal constructs are deleted the rules remain and can be automatically applied at a future time.

This makes configuration easy since a set of rules can be defined, like "all IB networks priority 1", "all Gemini networks priority 2", etc, and when a network is added, it automatically inherits these rules.

Peers are normally not created explicitly by the admin. Instead, a peer construct is created in LNet when the ULP requests to send a message to a peer, or when the node receives an unsolicited message from a peer. Policies must still be applicable to such peers, especially router policies, where we want to associate a specific set of routers with clients within a user-defined range. Having the policies and the matching occur in the kernel aids in fulfilling this requirement.

Rule Application

Performance needs to be taken into account with this feature. It is not feasible to traverse the policy lists on every send operation, as this would add unnecessary overhead. When rules are applied they have to be "flattened" to the constructs they impact. For example, a Network Rule is added as follows: o2ib priority 0. This rule gives priority to the o2ib network for sending. A priority field will be added to the network and set to 0 for the o2ib network. As we traverse the networks in the selection algorithm, which is part of the current code, the priority field will be compared. This is a more optimal approach than examining the policies on every send to see if we get any matches.
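The flattening idea described above can be sketched as follows. The policy list is walked only when rules change, and the send path compares a plain integer field. All names here (struct net, struct net_rule, flatten_net_rules(), select_net(), NET_PRIO_DEFAULT) are hypothetical. The sketch also assumes that a network with no matching rule gets the lowest priority, and that a later rule in the list overrides an earlier one; neither behaviour is specified by this design.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* assumption: nets with no matching rule get the lowest priority */
#define NET_PRIO_DEFAULT UINT32_MAX

struct net {
	const char *name;
	uint32_t priority;	/* flattened from the rules; 0 is highest */
};

struct net_rule {
	const char *net_name;
	uint32_t priority;
};

/* Slow path: run when a rule or a net is added or removed. */
static void flatten_net_rules(struct net *nets, size_t nnets,
			      const struct net_rule *rules, size_t nrules)
{
	for (size_t i = 0; i < nnets; i++) {
		nets[i].priority = NET_PRIO_DEFAULT;
		for (size_t j = 0; j < nrules; j++) {
			if (strcmp(nets[i].name, rules[j].net_name) == 0)
				nets[i].priority = rules[j].priority;
		}
	}
}

/* Fast path: the send path only compares the flattened field. */
static const struct net *select_net(const struct net *nets, size_t nnets)
{
	const struct net *best = NULL;

	for (size_t i = 0; i < nnets; i++) {
		if (best == NULL || nets[i].priority < best->priority)
			best = &nets[i];
	}
	return best;
}
```

With the nets tcp and o2ib and the single rule "o2ib priority 0", flatten_net_rules() sets the o2ib priority field to 0 and select_net() returns o2ib without consulting the rule list.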

In Kernel Structures

/* lnet structure will keep a list of UDSPs */

struct lnet {

...

struct list_head ln_udsp_list;

...

};

/* each NID range is defined as net_id and an ip range */

struct lnet_ud_nid_descr {

__u32 ud_net_id;

struct list_head ud_ip_range;

};

/* UDSP action types */

enum lnet_udsp_action_type {

EN_LNET_UDSP_ACTION_PRIORITY = 0,

EN_LNET_UDSP_ACTION_NONE = 1,

};

/*

* a UDSP rule can have up to three user defined NID descriptors

* - src: defines the local NID range for the rule

* - dst: defines the peer NID range for the rule

* - rte: defines the router NID range for the rule

*

* An action union defines the action to take when the rule

* is matched

*/

struct lnet_udsp {

struct list_head udsp_on_list;

struct lnet_ud_nid_descr *udsp_src;

struct lnet_ud_nid_descr *udsp_dst;

struct lnet_ud_nid_descr *udsp_rte;

enum lnet_udsp_action_type udsp_action_type;

union {

__u32 udsp_priority;

} udsp_action;

};

/* The rules are flattened in the LNet structures as shown below */

struct lnet_net {

...

/* defines the relative priority of this net compared to others in the system */

__u32 net_priority;

...

};

struct lnet_ni {

...

/* defines the relative priority of this NI compared to other NIs in the net */

__u32 ni_priority;

...

};

struct lnet_peer_ni {

...

/* defines the relative peer_ni priority compared to other peer_nis in the peer */

__u32 lpni_priority;

/* defines the list of local NID(s) (>=1) which should be used as the source */

union {

lnet_nid_t nid;

lnet_nid_t *nids;

} lpni_pref;

/* defines the list of router NID(s) to be used when sending to this peer NI */

lnet_nid_t *lpni_rte_nids;

...

};

/* UDSPs will be passed to the kernel via IOCTL */

#define IOC_LIBCFS_ADD_UDSP _IOWR(IOC_LIBCFS_TYPE, 106, IOCTL_CONFIG_SIZE)

/* UDSP will be grabbed from the kernel via IOCTL */

/* the command number must differ from IOC_LIBCFS_ADD_UDSP; 107 is a placeholder */

#define IOC_LIBCFS_GET_UDSP _IOWR(IOC_LIBCFS_TYPE, 107, IOCTL_CONFIG_SIZE)

Kernel IOCTL Handling

/* api-ni.c will be modified to handle adding a UDSP */

int

LNetCtl(unsigned int cmd, void *arg)

{

...

case IOC_LIBCFS_ADD_UDSP: {

struct lnet_ioctl_config_udsp *config_udsp = arg;

mutex_lock(&the_lnet.ln_api_mutex);

/*

* add and do initial flattening of the UDSP into

* internal structures

*/

rc = lnet_add_and_flatten_udsp(config_udsp);

mutex_unlock(&the_lnet.ln_api_mutex);

return rc;

}

case IOC_LIBCFS_GET_UDSP: {

struct lnet_ioctl_config_udsp *get_udsp = arg;

mutex_lock(&the_lnet.ln_api_mutex);

/*

* get the udsp at index provided. Return -ENOENT if

* no more UDSPs to get

*/

rc = lnet_get_udsp(get_udsp, get_udsp->idx);

mutex_unlock(&the_lnet.ln_api_mutex);

return rc;

}

...

}

Kernel Selection Algorithm Modifications

/*

* When a UDSP rule associates local NIs with remote NIs, the list of local NIs NIDs

* is flattened to a list in the associated peer_NI. When selecting a peer NI, the

* peer NI with the corresponding preferred local NI is selected.

*/

bool

lnet_peer_is_pref_nid_locked(struct lnet_peer_ni *lpni, lnet_nid_t nid)

{

...

}

static struct lnet_peer_ni *

lnet_select_peer_ni(struct lnet_send_data *sd, struct lnet_peer *peer,

struct lnet_peer_net *peer_net)

{

...

ni_is_pref = lnet_peer_is_pref_nid_locked(lpni, best_ni->ni_nid);

lpni_prio = lpni->lpni_priority;

if (lpni_healthv < best_lpni_healthv)

continue;

/*

* select the NI with the highest priority.

*/

else if (lpni_prio > best_lpni_prio)

continue;

else if (lpni_prio < best_lpni_prio)

best_lpni_prio = lpni_prio;

/*

* select the NI which has the best_ni's NID in its preferred list

*/

else if (!preferred && ni_is_pref)

preferred = true;

...

}

static struct lnet_ni *

lnet_get_best_ni(struct lnet_net *local_net, struct lnet_ni *best_ni,

struct lnet_peer *peer, struct lnet_peer_net *peer_net,

int md_cpt)

{

...

ni_prio = ni->ni_priority;

if (ni_fatal) {

continue;

} else if (ni_healthv < best_healthv) {

continue;

} else if (ni_healthv > best_healthv) {

best_healthv = ni_healthv;

if (distance < shortest_distance)

shortest_distance = distance;

/*

* if this NI is lower in priority than the one already set then discard it

* otherwise use it and set the best priority so far to this NI's.

*/

} else if (ni_prio > best_ni_prio) {

continue;

} else if (ni_prio < best_ni_prio) {

best_ni_prio = ni_prio;

}

...

}

Rule Structure

Selection policy rules are comprised of two parts:

- The matching rule

- The rule action

The matching rule is what's used to match a NID or a network. The action is what's applied when the rule is matched.

A rule can be uniquely identified using the matching rule or an internal ID. The ID is assigned by the LNet module when a rule is added, and is returned to user space as part of the output of a show command.

Structures

/* This is a common structure which describes an expression */

struct lnet_match_expr {

};

struct lnet_selection_descriptor {

enum selection_type lsd_type;

char *lsd_pattern1;

char *lsd_pattern2;

union {

__u32 lsda_priority;

} lsd_action_u;

};

/*

* lustre_lnet_add_selection

* Add a selection policy rule.

*

* selection - describes the selection policy rule

* seq_no - sequence number of the command

* err_rc - YAML structure of the resultant return code

*/

int lustre_lnet_add_selection(struct lnet_selection_descriptor *selection, int seq_no, struct cYAML **err_rc);

cfg-100, cfg-105, cfg-110, cfg-115, cfg-120, cfg-125, cfg-130, cfg-135, cfg-140, cfg-160, cfg-165

lnetctl Interface

YAML Syntax

Each selection rule will translate into a separate IOCTL to the kernel.

Flattening rules

Rules will have serialize and deserialize APIs. The serialize API will flatten the rules into a contiguous buffer that will be sent to the kernel. On the kernel side the rules will be deserialized to be stored and queried. When user space queries the rules, the rules are serialized and sent up to user space, which deserializes them and prints them in YAML format.

Selection Policies

There are four different types of rules that this HLD will address:

- LNet Network priority rule

- This rule assigns a priority to a network. During selection the network with the highest priority is preferred.

- Local NID rule

- This rule assigns a priority to a local NID within an LNet network. This NID is preferred during selection.

- Remote NID rule

- This rule assigns a priority to a remote NID within an LNet network. This NID is preferred during selection.

- Peer-to-peer rules

- This rule associates local NIs with peer NIs. When selecting a peer NI to send to, the one associated with the selected local NI is preferred.

These rules are applied differently in the kernel.

The Network priority rule sets the priority value in struct lnet_net to the one defined in the rule. The local NID rule sets the priority value in struct lnet_ni to the one defined in the rule. The remote NID rule sets the priority value in struct lnet_peer_ni to the one defined in the rule. The infrastructure for peer-to-peer rules is implemented via a list of preferred NIDs kept in struct lnet_peer_ni. Once the local network and the best NI have been selected, we go through all the peer NIs on the same network and prefer the peer NI which has the best NI's NID on its preferred list, thereby preferring a specific pathway between the node and the peer.

Each of these rules impacts a different part of the selection algorithm. The Network rule impacts the selection of the local network. The Local NID rule impacts the selection of the best NI to send from on the preferred network. Remote NID and peer-to-peer rules both impact the selection of the peer NI to send to.
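The preferred NID list used by peer-to-peer rules can be sketched as below. This is a simplified illustration, not the kernel code: nid_t stands in for lnet_nid_t, and struct peer_ni, peer_ni_prefers() and select_peer_ni() are hypothetical names. Health, credits and round robin are left out so that only the priority and preferred-list steps are visible.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef uint64_t nid_t;		/* stand-in for lnet_nid_t */

struct peer_ni {
	nid_t nid;
	uint32_t priority;	/* 0 is the highest priority */
	const nid_t *pref_nids;	/* local NIDs flattened from p2p rules */
	size_t pref_count;
};

/* True if local_nid is on the peer NI's preferred local NID list. */
static bool peer_ni_prefers(const struct peer_ni *lpni, nid_t local_nid)
{
	for (size_t i = 0; i < lpni->pref_count; i++) {
		if (lpni->pref_nids[i] == local_nid)
			return true;
	}
	return false;
}

/*
 * Select a peer NI for an already chosen local NI (best_ni_nid).
 * Priority is compared first; among equal priorities, a peer NI that
 * lists best_ni_nid as preferred wins. The first candidate wins ties.
 */
static const struct peer_ni *select_peer_ni(const struct peer_ni *lpnis,
					    size_t n, nid_t best_ni_nid)
{
	const struct peer_ni *best = NULL;
	bool best_pref = false;

	for (size_t i = 0; i < n; i++) {
		bool pref = peer_ni_prefers(&lpnis[i], best_ni_nid);

		if (best != NULL) {
			if (lpnis[i].priority > best->priority)
				continue;
			if (lpnis[i].priority == best->priority &&
			    (best_pref || !pref))
				continue;
		}
		best = &lpnis[i];
		best_pref = pref;
	}
	return best;
}
```

If two peer NIs have equal priority and only one of them lists the selected local NID as preferred, that peer NI is returned regardless of its position in the array.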

It is possible to use both the local NID rule and the peer-to-peer rule to force messages to always take a specific path. For example, assuming a node with three interfaces 10.10.10.3, 10.10.10.4 and 10.10.10.5 and two rules as follows:

selection:
    - type: nid
      local: true
      nid: 10.10.10.5
      priority: 0

selection:
    - type: peer
      local: 10.10.10.5
      remote: 10.10.10.6
      priority: 0

These two rules will always prefer sending messages from 10.10.10.5 to 10.10.10.6, as opposed to using that path only occasionally, when the 10.10.10.5 interface is selected for every third message under round robin.

As another example, it is also possible to prioritize a set of local and remote NIs so that they are always preferred. Assuming two peers

- PeerA: 10.10.10.2, 10.10.10.3 and 10.10.30.2

- PeerB: 10.10.10.4, 10.10.10.5 and 10.10.30.3

We can set up the following rules:

selection:
    - type: nid
      local: true
      nid: 10.10.10.*
      priority: 0

selection:
    - type: nid
      local: false
      nid: 10.10.10.*
      priority: 0

These rules will always prefer messages to be sent between the 10.10.10.* interfaces, rather than the 10.10.30.* interfaces.

The question to answer is whether such restrictions are generally useful. One use case for such rules is debugging or characterizing the network. Another argument is that the clusters that use Lustre are so diverse that allowing them flexibility over traffic control is a benefit, as long as the default behavior is optimal out of the box.

The following section attempts to outline some real-life scenarios where these rules can be used.

Use Cases

Preferred Network

If a node can be reached on two LNet networks, it is sometimes desirable to designate a fail-over network. Currently in Lustre there is the concept of High Availability (HA), which allows servicenode NIDs to be defined as described in the Lustre manual section 11.2. By using the syntax described in that section, two NIDs to the same peer can also be defined. However, this approach suffers from a current limitation in the Lustre software, where the NIDs are exposed to layers above LNet. It is ideal to keep the handling of network failures contained within LNet and only let Lustre worry about defining HA.

Given this, it is desirable to have two LNet networks defined on a node, each of which could have multiple interfaces, and then have a way to tell LNet to always use one network until it is no longer available, i.e. all interfaces in that network are down.

In this manner we separate the functionality of defining fail-over pairs from defining fail-over networks.

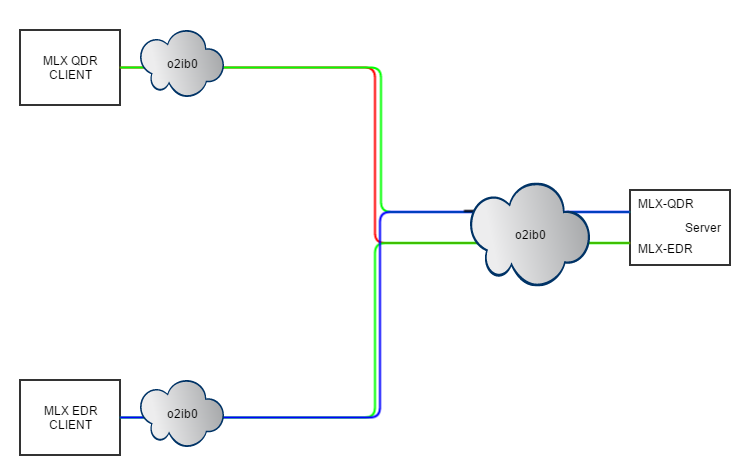

Preferred NIDs

In a scenario where servers are being upgraded with new interfaces to be used in Multi-Rail, it's possible to add interfaces, for example MLX-EDR interfaces to the server. The user might want to continue making the existing QDR clients use the QDR interface, while new clients can use the EDR interface or even both interfaces. By specifying rules on the clients that prefer a specific interface this behavior can be achieved.

Preferred local/remote NID pairs

This is a finer tuned method of specifying an exact path, by not only specifying a priority to a local interface or a remote interface, but by specifying concrete pairs of interfaces that are most preferred. A peer interface can be associated with multiple local interfaces if necessary, to have a N:1 relationship between local interfaces and remote interfaces.

Refer to Olaf's LUG 2016/LAD 2016 PPT for more context.

DLC APIs

The DLC library will provide the outlined APIs to expose a way to create, delete and show rules.

Once rules are created and stored in the kernel, they are assigned an ID. This ID is returned and shown by the show command, which dumps the rules. This ID can be referenced later to delete a rule. The process is described in more detail below.

/*
 * lustre_lnet_add_net_sel_pol
 *   Add a net selection policy. If there already exists a
 *   policy for this net it will be updated.
 *     net - Network for the selection policy
 *     priority - priority of the rule
 */
int lustre_lnet_add_net_sel_pol(char *net, int priority);

/*
 * lustre_lnet_del_net_sel_pol
 *   Delete a net selection policy.
 *     net - Network for the selection policy
 *     id - [OPTIONAL] ID of the policy. This can be retrieved via a show command.
 */
int lustre_lnet_del_net_sel_pol(char *net, int id);

/*
 * lustre_lnet_show_net_sel_pol
 *   Show configured net selection policies.
 *     net - filter on the net provided.
 */
int lustre_lnet_show_net_sel_pol(char *net);

/*
 * lustre_lnet_add_nid_sel_pol
 *   Add a nid selection policy. If there already exists a
 *   policy for this nid it will be updated. NIDs can be either
 *   local NIDs or remote NIDs.
 *     nid - NID for the selection policy
 *     local - is this a local NID
 *     priority - priority of the rule
 */
int lustre_lnet_add_nid_sel_pol(char *nid, bool local, int priority);

/*
 * lustre_lnet_del_nid_sel_pol
 *   Delete a nid selection policy.
 *     nid - NID for the selection policy
 *     local - is this a local NID
 *     id - [OPTIONAL] ID of the policy. This can be retrieved via a show command.
 */
int lustre_lnet_del_nid_sel_pol(char *nid, bool local, int id);

/*
 * lustre_lnet_show_nid_sel_pol
 *   Show configured nid selection policies.
 *     nid - filter on the NID provided.
 */
int lustre_lnet_show_nid_sel_pol(char *nid);

/*
 * lustre_lnet_add_peer_sel_pol
 *   Add a peer to peer selection policy. If there already exists a
 *   policy for the pair it will be updated.
 *     src_nid - source NID
 *     dst_nid - destination NID
 *     priority - priority of the rule
 */
int lustre_lnet_add_peer_sel_pol(char *src_nid, char *dst_nid, int priority);

/*
 * lustre_lnet_del_peer_sel_pol
 *   Delete a peer to peer selection policy.
 *     src_nid - source NID
 *     dst_nid - destination NID
 *     id - [OPTIONAL] ID of the policy. This can be retrieved via a show command.
 */
int lustre_lnet_del_peer_sel_pol(char *src_nid, char *dst_nid, int id);

/*
 * lustre_lnet_show_peer_sel_pol
 *   Show peer to peer selection policies.
 *     src_nid - [OPTIONAL] source NID. If provided the output will be filtered
 *               on this value.
 *     dst_nid - [OPTIONAL] destination NID. If provided the output will be filtered
 *               on this value.
 */
int lustre_lnet_show_peer_sel_pol(char *src_nid, char *dst_nid);

Data structures

User space/Kernel space Data structures

/*

* describes a network:

* nw_id: can be the base network name, ex: o2ib or a full network id, ex: o2ib3.

* nw_expr: an expression to describe the variable part of the network ID

* ex: tcp* - all tcp networks

* ex: tcp[1-5] - resolves to tcp1, tcp2, tcp3, tcp4 and tcp5.

*/

struct lustre_lnet_network_descr {

__u32 nw_id;

struct cfs_expr_list *nw_expr;

};

/*

* lustre_lnet_network_rule

* network rule

* nwr_link - link on rule list

* nwr_descr - network descriptor

* nwr_priority - priority of the rule.

* nwr_id - ID of the rule assigned while deserializing if not already assigned.

*/

struct lustre_lnet_network_rule {

struct list_head nwr_link;

struct lustre_lnet_network_descr nwr_descr;

__u32 nwr_priority;

__u32 nwr_id;

};

/*

* lustre_lnet_nidr_range_descr

* nidr_expr - expression describing the IP part of the NID

* nidr_nw - a description of the network

*/

struct lustre_lnet_nidr_range_descr {

struct list_head nidr_expr;

struct lustre_lnet_network_descr nidr_nw;

};

/*

* lustre_lnet_nidr_range_rule

* Rule for the nid range.

* nidr_link - link on the rule list

* nidr_descr - descriptor of the nid range

* nidr_priority - priority of the rule

* nidr_local - true if the rule matches local NIDs, false if it matches remote NIDs

*/

struct lustre_lnet_nidr_range_rule {

struct list_head nidr_link;

struct lustre_lnet_nidr_range_descr nidr_descr;

int nidr_priority;

bool nidr_local;

};

/*

* lustre_lnet_p2p_rule

* Rule for the peer to peer.

* p2p_link - link on the rule list

* p2p_src_descr - source nid range

* p2p_dst_descr - destination nid range

* priority - priority of the rule

*/

struct lustre_lnet_p2p_rule {

struct list_head p2p_link;

struct lustre_lnet_nidr_range_descr p2p_src_descr;

struct lustre_lnet_nidr_range_descr p2p_dst_descr;

int priority;

};

IOCTL Data structures

enum lnet_sel_rule_type {

LNET_SEL_RULE_NET = 0,

LNET_SEL_RULE_NID,

LNET_SEL_RULE_P2P

};

struct lnet_expr {

__u32 ex_lo;

__u32 ex_hi;

__u32 ex_stride;

};

struct lnet_net_descr {

__u32 nsd_net_id;

struct lnet_expr nsd_expr;

};

struct lnet_nid_descr {

struct lnet_expr nir_ip[4];

struct lnet_net_descr nir_net;

};

struct lnet_ioctl_net_rule {

struct lnet_net_descr nsr_descr;

__u32 nsr_prio;

__u32 nsr_id;

};

struct lnet_ioctl_nid_rule {

struct lnet_nid_descr nir_descr;

__u32 nir_prio;

__u32 nir_id;

bool nir_local;

};

struct lnet_ioctl_net_p2p_rule {

struct lnet_nid_descr p2p_src_descr;

struct lnet_nid_descr p2p_dst_descr;

__u32 p2p_prio;

__u32 p2p_id;

};

/*

* lnet_ioctl_rule_blk

* describes a set of rules of the same type to transfer to the kernel.

* rule_hdr - header information describing the total size of the transfer

* rule_type - type of rules included

* rule_size - size of each individual rule. Can be used to check backwards compatibility

* rule_count - number of rules included in the bulk.

* rule_bulk - pointer to the user space allocated memory containing the rules.

*/

struct lnet_ioctl_rule_blk {

struct libcfs_ioctl_hdr rule_hdr;

enum lnet_sel_rule_type rule_type;

__u32 rule_size;

__u32 rule_count;

void __user *rule_bulk;

};

Serialization/Deserialization

Both user space and kernel space are going to store the rules in the data structures described above. However, once user space has parsed and stored the rules it will need to serialize them and send them to the kernel.

The serialization process will use the IOCTL data structures defined above. The process itself is straightforward. The rules as stored in user space or in the kernel are kept in a linked list, but each rule is of deterministic size and form. For example, an IP is described as four struct cfs_range_expr structures. These can be translated into four struct lnet_expr structures.

As an example, a serialized list of net rules is going to look as follows:
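To illustrate why four range expressions are enough to describe the IP part of a NID, the sketch below matches an IPv4 address against four lo/hi/stride ranges, one per octet. struct expr mirrors the fields of struct lnet_expr, but expr_match() and ip_match() are hypothetical helpers. The sketch assumes the address is held in host byte order with the first octet in the most significant byte, and that a range matches the values lo, lo + stride, lo + 2 * stride and so on up to hi.

```c
#include <stdbool.h>
#include <stdint.h>

/* mirrors the fields of struct lnet_expr */
struct expr {
	uint32_t lo;
	uint32_t hi;
	uint32_t stride;
};

/* True if v is one of lo, lo + stride, lo + 2 * stride, ... <= hi. */
static bool expr_match(const struct expr *e, uint32_t v)
{
	if (v < e->lo || v > e->hi)
		return false;
	if (e->stride <= 1)	/* treat 0 like 1 to avoid dividing by zero */
		return true;
	return (v - e->lo) % e->stride == 0;
}

/*
 * Match an IPv4 address against one expression per octet.
 * assumption: addr is in host byte order, first octet in the top byte.
 */
static bool ip_match(const struct expr ip[4], uint32_t addr)
{
	for (int i = 0; i < 4; i++) {
		uint32_t octet = (addr >> (8 * (3 - i))) & 0xff;

		if (!expr_match(&ip[i], octet))
			return false;
	}
	return true;
}
```

Under these assumptions the pattern 10.10.10.[5-8] becomes the four ranges {10,10,1}, {10,10,1}, {10,10,1} and {5,8,1}, and a wildcard octet becomes {0,255,1}.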

The rest of the rules will look very similar as above, except that the list of rules included in the memory pointed to by rule_bulk is going to contain the pertinent structure format.

On the receiving end the process is reversed to rebuild the linked lists.
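The round trip between a linked list and a contiguous block can be sketched in plain C as follows. This is a user-space illustration under assumptions: struct net_rule and struct wire_net_rule are simplified, hypothetical stand-ins for the rule and IOCTL structures, the kernel list_head is replaced by a plain next pointer, and the copy across the user/kernel boundary is not shown.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

struct net_rule {		/* in-memory form: node on a linked list */
	struct net_rule *next;
	uint32_t net_id;
	uint32_t prio;
	uint32_t id;
};

struct wire_net_rule {		/* fixed-size wire form, no pointers */
	uint32_t net_id;
	uint32_t prio;
	uint32_t id;
};

static void rules_free(struct net_rule *head)
{
	while (head != NULL) {
		struct net_rule *next = head->next;

		free(head);
		head = next;
	}
}

/* Copy the list into bulk. Returns the rule count, or -1 if bulk is too small. */
static int rules_serialize(const struct net_rule *rules,
			   struct wire_net_rule *bulk, size_t bulk_size)
{
	size_t max = bulk_size / sizeof(*bulk);
	size_t n = 0;

	for (; rules != NULL; rules = rules->next) {
		if (n == max)
			return -1;
		bulk[n].net_id = rules->net_id;
		bulk[n].prio = rules->prio;
		bulk[n].id = rules->id;
		n++;
	}
	return (int)n;
}

/* Rebuild a list in the same order. Returns NULL if count is 0 or on allocation failure. */
static struct net_rule *rules_deserialize(const struct wire_net_rule *bulk,
					  size_t count)
{
	struct net_rule *head = NULL;
	struct net_rule **tail = &head;

	for (size_t i = 0; i < count; i++) {
		struct net_rule *r = malloc(sizeof(*r));

		if (r == NULL) {
			rules_free(head);
			return NULL;
		}
		r->next = NULL;
		r->net_id = bulk[i].net_id;
		r->prio = bulk[i].prio;
		r->id = bulk[i].id;
		*tail = r;
		tail = &r->next;
	}
	return head;
}
```

Because every wire entry has the same size, the receiver only needs the rule count and the rule size to walk the block, which is what rule_count and rule_size in struct lnet_ioctl_rule_blk provide.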

Common functions that can be called from user space and kernel space will be created to serialize and deserialize the rules:

/*
 * lnet_sel_rule_serialize()
 *   Serialize the rules pointed to by rules into the memory block that is provided. In order for this
 *   API to work in both Kernel and User space the bulk pointer needs to be passed in. When this API
 *   is called in the kernel, it is expected that the bulk memory is allocated in userspace. This API
 *   is intended to be called from the kernel to serialize the rules before sending them to user space.
 *     rules [IN] - rules to be serialized
 *     rule_type [IN] - rule type to be serialized
 *     bulk_size [IN] - size of memory allocated.
 *     bulk [OUT] - allocated block of memory where the serialized rules are stored.
 */
int lnet_sel_rule_serialize(struct list_head *rules, enum lnet_sel_rule_type rule_type, __u32 *bulk_size, void __user *bulk);

/*
 * lnet_sel_rule_deserialize()
 *   Given a bulk of rule_type rules, deserialize and append rules to the linked
 *   list passed in. Each rule is assigned an ID > 0 if an ID is not already assigned.
 *     bulk [IN] - memory block containing serialized rules
 *     bulk_size [IN] - size of bulk memory block
 *     rule_type [IN] - type of rule to deserialize
 *     rules [OUT] - linked list to append the deserialized rules to
 */
int lnet_sel_rule_deserialize(void __user *bulk, __u32 bulk_size, enum lnet_sel_rule_type rule_type, struct list_head *rules);

Policy IOCTL Handling

Three new IOCTLs will need to be added: IOC_LIBCFS_ADD_RULES, IOC_LIBCFS_DEL_RULES, and IOC_LIBCFS_GET_RULES.

IOC_LIBCFS_ADD_RULES

The handler for the IOC_LIBCFS_ADD_RULES will perform the following operations:

- call lnet_sel_rule_deserialize()

- Iterate through all the local networks and apply the rules

- Iterate through all the peers and apply the rules.

- splice the new list with the existing rules in the process resolving any conflicts. New rules always trump old rules (no pun intended).

Application of the rules will be done under api_mutex_lock and the exclusive lnet_net_lock to avoid having the peer or local net lists changed while the rules are being applied.

There will be different lists, one for each rule type. The rules are iterated and applied whenever:

- A local network interface is added.

- A remote peer/peer_net/peer_ni is added.

IOC_LIBCFS_DEL_RULES

The handler for IOC_LIBCFS_DEL_RULES will delete the rule whose ID matches the ID passed in. If no ID is passed in, the rule which exactly matches the one provided is deleted.

There will be no other actions taken on rule removal. Once the rule has been applied it will remain applied until the objects it has been applied to are removed.

IOC_LIBCFS_GET_RULES

The handler for the IOC_LIBCFS_GET_RULES will call lnet_sel_rule_serialize() on the master linked list for the type of the rule identified in struct lnet_ioctl_rule_blk.

It fills as many rules as can fit in the bulk by examining the result of (rule_hdr.ioc_len - sizeof(struct lnet_ioctl_rule_blk)) / rule_size . That number of rules are serialized and placed in the bulk memory block. The IOCTL returns ENOSPC if the given bulk memory block is not enough to hold all the rules. It assigns the number of rules serialized in rule_count. The userspace process can make another call with the number of rules to skip set in rule_count. The handler will skip that indicated number of rules and fill the new bulk memory with the remaining rules. This process can be repeated until all the rules are returned to userspace.
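The paging arithmetic described above can be sketched as a small helper. This is an illustration only: get_rules_window() and its parameter names are hypothetical, bulk_len stands for the result of rule_hdr.ioc_len - sizeof(struct lnet_ioctl_rule_blk), and the sketch assumes the handler returns a negative errno value when more rules remain.

```c
#include <errno.h>
#include <stdint.h>

/*
 * total     - number of rules currently stored
 * skip      - number of rules the caller has already received
 * bulk_len  - bytes available for rules in this call
 * rule_size - size of one serialized rule
 * count     - [OUT] number of rules that fit in this call
 *
 * Returns 0 if this call returns the last of the rules, -ENOSPC if
 * more rules remain after it, -EINVAL if rule_size is 0.
 */
static int get_rules_window(uint32_t total, uint32_t skip, uint32_t bulk_len,
			    uint32_t rule_size, uint32_t *count)
{
	uint32_t capacity;
	uint32_t remaining;

	if (rule_size == 0)
		return -EINVAL;

	capacity = bulk_len / rule_size;
	remaining = skip < total ? total - skip : 0;
	*count = remaining < capacity ? remaining : capacity;

	return remaining > capacity ? -ENOSPC : 0;
}
```

For 10 stored rules of 12 bytes each and a 48 byte bulk block, successive calls with skip set to 0, 4 and 8 return 4, 4 and 2 rules, and only the last call returns 0.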

In user space the rules are printed in the same YAML format as they are parsed in.

Policy Application

Net Rule

The net which matches the rule will be assigned the priority defined in the rule.

NID Rule

If the local flag is set then attempt to match the local_nis otherwise attempt to match the peer_nis. The NI matched shall be assigned the priority defined in the rule.

Peer to Peer Rule

NIDs of local_nis matching the source NID pattern in the peer to peer rule will be added to a list on the peer_nis whose NIDs match the destination NID pattern.

Selection Algorithm Integration

Currently the selection algorithm performs its job in the following general steps:

- determine the best network to communicate to the destination peer by looking at all the LNet networks the peer is on.

- select the network with the highest priority

- for each selected network go through all the local NIs and keep track of the best_ni based on:

- Its priority

- NUMA distance

- available credits

- round robin

- Skip any networks which are lower priority than the "active" one. If there are multiple networks with the same priority then the best_ni is selected from amongst them using the above criteria.

- Once the best_ni has been selected, select the best peer_ni available by going through the list of the peer_nis on the selected network. Select the peer_ni based on:

- The priority of the peer_ni.

- if the NID of the best_ni is on the preferred local NID list of the peer_ni. It is placed there through the application of the peer to peer rules.

- available credits

- round robin
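The order of the local NI criteria listed above (priority, NUMA distance, available credits, round robin) can be sketched as a comparison followed by a scan. This is not the LNet selection code: struct local_ni, ni_compare() and select_best_ni() are hypothetical, the health checks described elsewhere in this document are omitted, and round robin is modelled with a per-NI sequence counter, which is an assumption of this sketch.

```c
#include <stddef.h>
#include <stdint.h>

struct local_ni {
	uint32_t priority;	/* 0 is the highest priority */
	unsigned int distance;	/* NUMA distance, smaller is better */
	int credits;		/* available credits, larger is better */
	unsigned int seq;	/* assumption: bumped each time the NI is picked */
};

/* Returns < 0 if a is preferred, > 0 if b is preferred, 0 if equal. */
static int ni_compare(const struct local_ni *a, const struct local_ni *b)
{
	if (a->priority != b->priority)
		return a->priority < b->priority ? -1 : 1;
	if (a->distance != b->distance)
		return a->distance < b->distance ? -1 : 1;
	if (a->credits != b->credits)
		return a->credits > b->credits ? -1 : 1;
	if (a->seq != b->seq)
		return a->seq < b->seq ? -1 : 1;
	return 0;
}

/* Scan all NIs, keep the best one, and mark it as used. */
static struct local_ni *select_best_ni(struct local_ni *nis, size_t n)
{
	struct local_ni *best = NULL;

	for (size_t i = 0; i < n; i++) {
		if (best == NULL || ni_compare(&nis[i], best) < 0)
			best = &nis[i];
	}
	if (best != NULL)
		best->seq++;	/* the least recently picked NI wins the next tie */
	return best;
}
```

Two NIs that are equal in priority, distance and credits are returned alternately by successive calls, while an NI with a lower priority is never returned as long as a higher priority NI is present.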

Misc

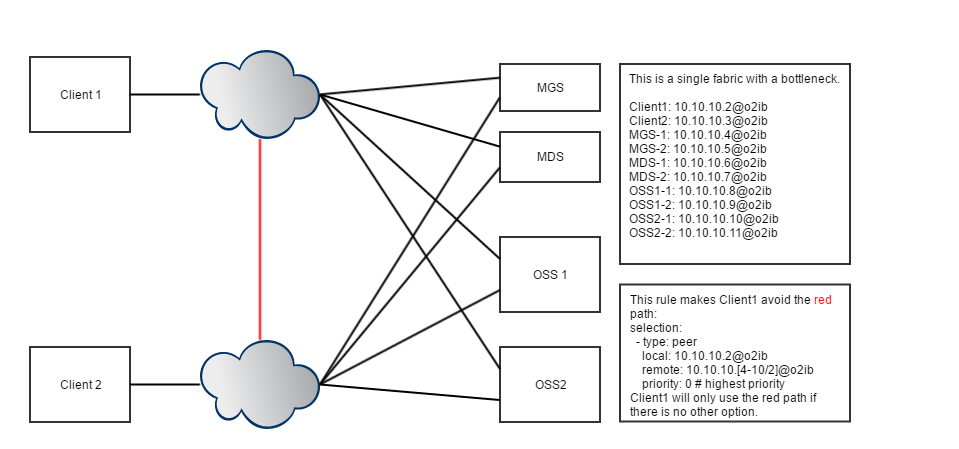

As an example, in a dragonfly topology as diagrammed above, a node can have multiple interfaces on the same network, but some interfaces are not optimized to go directly to the destination group. So if the selection algorithm is operating without any rules, it could select a local interface which is less than optimal.

Each cloud in the diagram represents a group of LNet nodes on the o2ib network. The admin should know which node interfaces resolve to a direct path to the destination group. Therefore, giving priority to a local NID within a network is a way to ensure that messages always prefer the optimized paths.

The diagram above was inspired by: https://www.ece.tufts.edu/~karen/classes/final_presentation/Dragonfly_Topology_Long.pptx

Refer to the above PowerPoint presentation for further discussion of the dragonfly topology.

Example

TBD: In a topology such as the one represented above, the sysadmin would presumably configure the routing so that messages heading to a particular IP destination in a different group get routed to the correct edge router, and from there to the destination group. When LNet is layered on top of this topology there would be no need to explicitly specify a rule, as all necessary routing rules would be defined in the routing tables of the kernel. The assumption here is that InfiniBand IP over IB (IPoIB) obeys the standard Linux routing rules.