Current versions of Lustre rely on a single active metadata server. Metadata throughput may be a bottleneck for large sites with many thousands of nodes. System architects typically resolve metadata throughput issues by deploying the MDS on faster hardware. However, this approach is not a panacea. Today, faster hardware means more cores rather than faster cores, and Lustre has a number of SMP scalability issues when running on servers with many CPUs. Scaling across multiple CPU sockets is a real problem: experience has shown that in some cases performance regresses when the number of CPU cores is high.

Over the last few years a number of ideas have been developed to enable Lustre to effectively exploit multiple CPUs. The purpose of this project is to take these ideas past the prototype stage and into production. These features will be benchmarked to quantify the scalability improvement of Lustre on a single metadata server. After review, the code will be committed to the Master branch of Lustre.

The Solution Architecture for SMP node affinity contains definitions that the reader may find helpful as background.

A CPU partition (CPT) is conceptually similar to the 'cpuset' of Linux. However, in Project Apus, the CPU partition is designed to provide a portable abstraction layer that can be conveniently used by kernel threads, unlike the 'cpuset', which is only accessible from userspace. To achieve this, a new CPU partition library will be implemented in libcfs.

A CPT table is a set of CPTs:

The Linux kernel provides NUMA-aware allocator interfaces (for example, kmalloc_node). An MDS may be configured with a partition that contains more than one NUMA node. To support this case, the Project Apus NUMA allocator will be able to spread allocations over the different nodes in one partition.

LNet is an asynchronous message library. Message completion events are delivered to the upper layer via an Event Queue (EQ), either by callback or by polling. Lustre kernel modules rely on the EQ callbacks of LNet to receive completion events.

There are two types of buffers for passive messages:

On the server side, each Lustre service contains these key components:

None.

struct cfs_cpt_table *cfs_cpt_table_alloc(int nparts)

Allocate an empty CPT table with @nparts partitions. The returned CPT table is not ready to use; the caller needs to set up the CPUs in each partition with cfs_cpt_set_*().

cfs_cpt_table_free(struct cfs_cpt_table *ctab)

Release CPT table @ctab.

cfs_cpt_set/unset_cpu(struct cfs_cpt_table *ctab, int cpt, int cpu)

Add/remove @cpu to/from partition @cpt of CPT table @ctab.

int cfs_cpt_set/unset_cpumask(struct cfs_cpt_table *ctab, int cpt, cpumask_t *mask)

Add/remove all CPUs in @mask to/from partition @cpt of CPT table @ctab.

void cfs_cpt_set/unset_node(struct cfs_cpt_table *ctab, int cpt, int node)

Add/remove all CPUs in NUMA node @node to/from partition @cpt of CPT table @ctab.

int cfs_cpt_current(struct cfs_cpt_table *ctab, int remap)

Map the current CPU to a partition ID of CPT table @ctab and return it. If the current CPU is not set in @ctab, the function hashes the current CPU to a partition ID of @ctab if @remap is true; otherwise it returns -1.
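The lookup and remap behaviour described for cfs_cpt_current() can be sketched in userspace C. This is an illustration only, not the libcfs implementation: the demo_* names and the table layout are hypothetical, the CPU is passed in as a parameter instead of being read from the running thread, and the "hash" is assumed to be a simple modulo.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical userspace stand-in for a CPT table; not the libcfs layout. */
struct demo_cpt_table {
    int ncpus;     /* number of CPU slots tracked by the table */
    int nparts;    /* number of partitions (CPTs) */
    int *cpu2cpt;  /* partition ID per CPU, or -1 if the CPU is not set */
};

/* Allocate an empty table: no CPU belongs to any partition yet. */
struct demo_cpt_table *demo_cpt_table_alloc(int ncpus, int nparts)
{
    struct demo_cpt_table *tab;
    int i;

    if (ncpus <= 0 || nparts <= 0)
        return NULL;
    tab = malloc(sizeof(*tab));
    if (tab == NULL)
        return NULL;
    tab->cpu2cpt = malloc(sizeof(int) * (size_t)ncpus);
    if (tab->cpu2cpt == NULL) {
        free(tab);
        return NULL;
    }
    for (i = 0; i < ncpus; i++)
        tab->cpu2cpt[i] = -1;
    tab->ncpus = ncpus;
    tab->nparts = nparts;
    return tab;
}

void demo_cpt_table_free(struct demo_cpt_table *tab)
{
    free(tab->cpu2cpt);
    free(tab);
}

/* Add @cpu to partition @cpt; return 0 on success, -1 on bad arguments. */
int demo_cpt_set_cpu(struct demo_cpt_table *tab, int cpt, int cpu)
{
    if (cpt < 0 || cpt >= tab->nparts || cpu < 0 || cpu >= tab->ncpus)
        return -1;
    tab->cpu2cpt[cpu] = cpt;
    return 0;
}

/*
 * Map @cpu to a partition ID. If @cpu is not set in the table, fall back to
 * a modulo "hash" when @remap is true (an assumption), otherwise return -1.
 */
int demo_cpt_of_cpu(const struct demo_cpt_table *tab, int cpu, int remap)
{
    if (cpu < 0)
        return -1;
    if (cpu < tab->ncpus && tab->cpu2cpt[cpu] >= 0)
        return tab->cpu2cpt[cpu];
    return remap ? cpu % tab->nparts : -1;
}
```

With a two-partition table in which only CPU 3 is set (in partition 1), demo_cpt_of_cpu() returns 1 for CPU 3, returns -1 for CPU 5 when @remap is false, and returns 5 % 2 = 1 for CPU 5 when @remap is true.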

struct cfs_cpt_table *cfs_cpt_table_create(int nparts)

Create a CPT table with a total of @nparts partitions, and evenly distribute all online CPUs across the partitions.
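One simple way to picture "evenly distribute" is to assign CPUs to partitions in contiguous, near-equal blocks. The sketch below assumes exactly that policy; the real library may take NUMA topology into account, and demo_even_cpt() and demo_cpt_weight() are hypothetical helpers rather than libcfs functions.

```c
#include <assert.h>

/*
 * Return the partition (0..nparts-1) for CPU index @cpu out of @ncpus CPUs,
 * or -1 on invalid input. CPUs are split into contiguous blocks whose sizes
 * differ by at most one (an assumed policy, for illustration only).
 */
int demo_even_cpt(int cpu, int ncpus, int nparts)
{
    if (ncpus <= 0 || nparts <= 0 || nparts > ncpus ||
        cpu < 0 || cpu >= ncpus)
        return -1;
    return (int)((long long)cpu * nparts / ncpus);
}

/* Count how many of the @ncpus CPUs land in partition @cpt. */
int demo_cpt_weight(int cpt, int ncpus, int nparts)
{
    int cpu;
    int n = 0;

    for (cpu = 0; cpu < ncpus; cpu++)
        if (demo_even_cpt(cpu, ncpus, nparts) == cpt)
            n++;
    return n;
}
```

For 16 CPUs and 4 partitions each partition receives 4 CPUs; for 10 CPUs and 4 partitions the block sizes are 3, 2, 3 and 2.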

struct cfs_cpt_table *cfs_cpt_table_create_pattern(char *pattern)

Create a CPT table based on the string pattern @pattern. More details can be found in “Use cases”.

Percpt-lock is similar to the percpu lock in the Linux kernel. It is a lock array in which each CPT has a private lock (one entry). Percpt-lock is a non-sleeping lock.

While holding one private lock of a percpt-lock (one entry of the lock array), the user may read and write data belonging to the partition of that private lock, and may also read global data protected by the percpt-lock. The user may modify global data only while holding all private locks (all entries in the lock array).

struct cfs_percpt_lock *cfs_percpt_lock_alloc/free(struct cfs_cpt_table *ctab)

Create/destroy a percpt-lock.

cfs_percpt_lock/unlock(struct cfs_percpt_lock *cpt_lock, int index)

Lock/unlock CPT @index if @index >= 0 and @index < (number of CPTs); otherwise exclusively lock/unlock all private locks in @cpt_lock.
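The locking rule above (one private lock for partition-local access, all private locks for exclusive access) can be sketched in userspace with POSIX threads. This is a minimal illustration under stated assumptions: the demo_* names and DEMO_LOCK_ALL are hypothetical, and pthread mutexes are used for portability even though they may sleep, whereas the percpt-lock described here is non-sleeping.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>

#define DEMO_LOCK_ALL (-1)  /* hypothetical index: lock every partition */

struct demo_percpt_lock {
    int nparts;              /* number of partitions (CPTs) */
    pthread_mutex_t *locks;  /* one private lock per partition */
};

struct demo_percpt_lock *demo_percpt_lock_alloc(int nparts)
{
    struct demo_percpt_lock *pcl;
    int i;

    if (nparts <= 0)
        return NULL;
    pcl = malloc(sizeof(*pcl));
    if (pcl == NULL)
        return NULL;
    pcl->locks = malloc(sizeof(*pcl->locks) * (size_t)nparts);
    if (pcl->locks == NULL) {
        free(pcl);
        return NULL;
    }
    for (i = 0; i < nparts; i++) {
        if (pthread_mutex_init(&pcl->locks[i], NULL) != 0) {
            /* Undo the locks initialised so far. */
            while (--i >= 0)
                pthread_mutex_destroy(&pcl->locks[i]);
            free(pcl->locks);
            free(pcl);
            return NULL;
        }
    }
    pcl->nparts = nparts;
    return pcl;
}

void demo_percpt_lock_free(struct demo_percpt_lock *pcl)
{
    int i;

    for (i = 0; i < pcl->nparts; i++)
        pthread_mutex_destroy(&pcl->locks[i]);
    free(pcl->locks);
    free(pcl);
}

/* Lock one partition, or every partition if @index is out of range. */
void demo_percpt_lock(struct demo_percpt_lock *pcl, int index)
{
    int i;

    if (index >= 0 && index < pcl->nparts) {
        pthread_mutex_lock(&pcl->locks[index]);
        return;
    }
    /* Always take the locks in ascending order so that two exclusive
     * lockers cannot deadlock against each other. */
    for (i = 0; i < pcl->nparts; i++)
        pthread_mutex_lock(&pcl->locks[i]);
}

/* Unlock one partition, or every partition if @index is out of range. */
void demo_percpt_unlock(struct demo_percpt_lock *pcl, int index)
{
    int i;

    if (index >= 0 && index < pcl->nparts) {
        pthread_mutex_unlock(&pcl->locks[index]);
        return;
    }
    for (i = pcl->nparts - 1; i >= 0; i--)
        pthread_mutex_unlock(&pcl->locks[i]);
}
```

The design trade-off is visible in the two paths: partition-local work touches a single lock and scales with the number of CPTs, while a global update pays for acquiring every entry in the array.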

void *cfs_cpt_malloc(struct cfs_cpt_table *ctab, int cpt, size_t size, unsigned gfp_flags)

Allocate @size bytes of memory in partition @cpt of CPT table @ctab. If partition @cpt covers more than one node, allocations are spread evenly over those nodes.

void *cfs_cpt_vmalloc(struct cfs_cpt_table *ctab, int cpt, size_t size)

Vmalloc @size bytes of memory in partition @cpt of CPT table @ctab. As above, if partition @cpt covers more than one node, allocations are spread evenly over those nodes.

cfs_page_t *cfs_page_cpt_alloc(struct cfs_cpt_table *ctab, int cpt, unsigned gfp_flags)

Allocate a page in partition @cpt of CPT table @ctab. If partition @cpt covers more than one node, allocations are spread evenly over those nodes.
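A round-robin "rotor" is one straightforward way to spread allocations over the nodes of a partition, as the three allocators above require. The sketch below only shows the node selection step and assumes round-robin as the policy; struct demo_cpt_nodes and demo_cpt_next_node() are hypothetical names, and in the kernel the chosen node would be handed to a NUMA-aware allocator such as kmalloc_node().

```c
#include <assert.h>

/* Hypothetical per-partition NUMA state; not the libcfs layout. */
struct demo_cpt_nodes {
    int nnodes;          /* number of NUMA nodes covered by the partition */
    const int *nodes;    /* IDs of those nodes */
    unsigned int rotor;  /* index of the next node slot to use */
};

/*
 * Pick the node for the next allocation in this partition, rotating
 * through the partition's nodes. Returns -1 if the partition has no nodes.
 * Not thread-safe: a real implementation would protect or atomically
 * update the rotor.
 */
int demo_cpt_next_node(struct demo_cpt_nodes *part)
{
    int node;

    if (part->nnodes <= 0)
        return -1;
    node = part->nodes[part->rotor % (unsigned int)part->nnodes];
    part->rotor++;
    return node;
}
```

For a partition covering nodes 2 and 3, successive calls return 2, 3, 2, 3, so consecutive allocations alternate between the two nodes.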

cfs_cpt_bind(struct cfs_cpt_table *ctab, int cpt)

Change the CPU affinity of the current thread and migrate it to the CPUs in partition @cpt of CPT table @ctab. If @cpt is negative, the current thread is allowed to run on all CPUs in @ctab. If there is only one CPT and it covers all online CPUs, no affinity is set.

ptlrpc_init_svc(struct cfs_cpt_table *ctab, int *cpts, int ncpt, …)

We add three new parameters to ptlrpc_init_svc().

cfs_cpt_table_create_pattern(char *pattern)

For example:

char *pattern = "0[0-3] 1[4-7] 2[8-11] 3[12-15]";

cfs_cpt_table_create_pattern() will create a CPT table with four partitions: the first partition contains CPU[0-3], the second contains CPU[4-7], the third contains CPU[8-11], and the last contains CPU[12-15].
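To make the pattern syntax concrete, the following userspace sketch parses a string of the form shown above into a CPU-to-partition map. It is not the libcfs parser: demo_parse_pattern(), struct demo_pattern_result and the size limits are hypothetical, and support for comma-separated entries inside the brackets (for example "0[0,4]") is an assumption that goes beyond the range syntax in the example.

```c
#include <assert.h>
#include <ctype.h>
#include <stdlib.h>

#define DEMO_MAX_CPTS 16  /* arbitrary limits chosen for the sketch */
#define DEMO_MAX_CPUS 64

struct demo_pattern_result {
    int ncpts;                  /* highest partition index seen, plus one */
    int cpt_of[DEMO_MAX_CPUS];  /* partition owning each CPU, or -1 */
};

/* Parse a non-negative decimal at *p and advance *p; return -1 if none. */
static long demo_parse_num(const char **p)
{
    char *end;
    long v;

    if (!isdigit((unsigned char)**p))
        return -1;
    v = strtol(*p, &end, 10);
    *p = end;
    return v;
}

/* Parse e.g. "0[0-3] 1[4-7]"; return 0 on success, -1 on a syntax error. */
int demo_parse_pattern(const char *pattern, struct demo_pattern_result *res)
{
    const char *p = pattern;
    int i;

    res->ncpts = 0;
    for (i = 0; i < DEMO_MAX_CPUS; i++)
        res->cpt_of[i] = -1;

    for (;;) {
        long cpt, lo, hi;

        while (isspace((unsigned char)*p))
            p++;
        if (*p == '\0')
            break;

        cpt = demo_parse_num(&p);
        if (cpt < 0 || cpt >= DEMO_MAX_CPTS || *p != '[')
            return -1;
        p++;  /* skip '[' */

        for (;;) {
            lo = hi = demo_parse_num(&p);
            if (*p == '-') {
                p++;
                hi = demo_parse_num(&p);
            }
            if (lo < 0 || hi < lo || hi >= DEMO_MAX_CPUS)
                return -1;
            for (i = (int)lo; i <= (int)hi; i++) {
                if (res->cpt_of[i] != -1)
                    return -1;  /* CPU listed in two places */
                res->cpt_of[i] = (int)cpt;
            }
            if (*p != ',')
                break;
            p++;  /* skip ',' and parse the next entry */
        }

        if (*p != ']')
            return -1;
        p++;  /* skip ']' */
        if ((int)cpt + 1 > res->ncpts)
            res->ncpts = (int)cpt + 1;
    }
    return res->ncpts > 0 ? 0 : -1;
}
```

Parsing the example pattern yields four partitions, with CPUs 0 to 3 mapped to partition 0 and CPUs 12 to 15 mapped to partition 3; CPUs that the pattern does not mention stay at -1.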

Libcfs will create a global CPT table (cfs_cpt_table) during the module load phase. This CPT table will be referred to by all Lustre modules.

If the user creates the CPT table from a string pattern, there is no special code path and the CPT table will be created as specified.

If the user wants to create the CPT table by giving the number of partitions as a parameter, then the steps are:

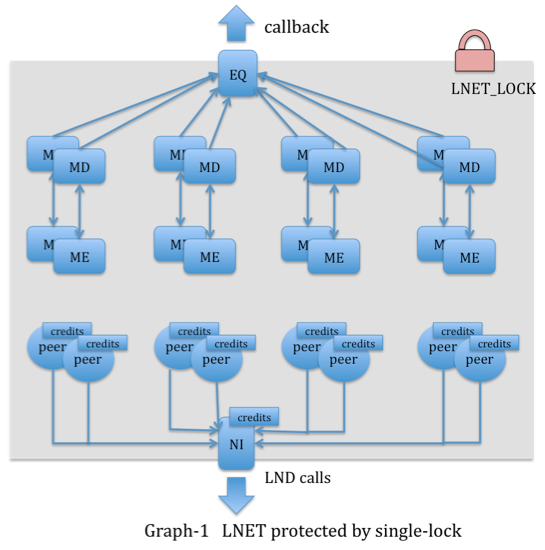

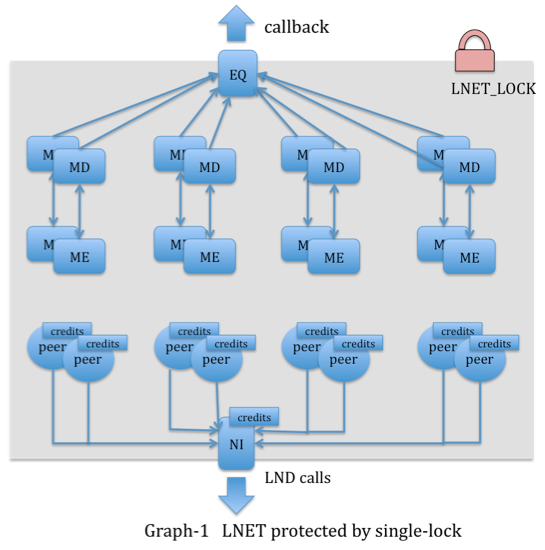

Current LNet is protected by a global spinlock LNET_LOCK (Graph-1):

This global spinlock becomes a significant performance bottleneck on machines with many CPUs. Specific issues with the spinlock include:

To improve this situation the global LNET_LOCK will be replaced with two percpt-locks (Graph-2):

As described above, the EQ callback will be protected by a private lock of a percpt-lock instead of a global lock:

As described in previous sections:

By default, the number of LND threads equals the number of hyper-threads (HTs) on a system. This number is excessive if there are tens or hundreds of cores with HTs but few network interfaces. Such a configuration can hurt performance because of lock contention and unnecessary context switching.

In this project, the number of LND threads will depend on the number of CPTs instead of the number of CPU cores (or HTs). Additional threads will only be created if more than one network interface (hardware network interface, not LNet interface) is available.

We will set CPT affinity for LND threads by default (that is, bind the threads to a CPU partition).

ptlrpc_service will be restructured. Many global resources of service will be changed to CPT resources:

By default, these resources will be allocated on all CPTs of the global cfs_cpt_table for the most commonly used services of MDT and OST. However, it will be possible to allocate any number of CPTs to a service in the future (see "Initializer of ptlrpc service" in "Functional specification").

Graph-3 shows the partitioned Lustre stack. Although we expect all requests to be localized in one partition (green lines), some cross-partition requests are still possible (red line):

In these cases, the LND thread will steal buffers from other partitions and deliver cross-partition requests to service threads. This will degrade performance because inter-CPU data traffic adds unnecessary overhead, but it is still expected to be an improvement on the current situation because we benefit from finer-grained locking in LNet and the ptlrpc service. Locking in LNet and ptlrpc is judged to be one of the biggest SMP performance issues in Lustre.

There are other thread pools that will benefit from attention, for example the libcfs workitem schedulers (for non-blocking rehash), ptlrpcd, and the ptlrpc reply handler. Currently, each of these thread pools creates one thread for every hyper-thread on the system. This behavior is sub-optimal because HTs are simply logical processors and because threads in different modules could be scheduled simultaneously.

With Project Apus, thread counts will be based on both the number of CPU cores and the number of CPU partitions, which decreases the overall number of threads in Lustre.

Define configurable parameters available in the feature.

By default, libcfs will create a global CPT table (cfs_cpt_table) that can be referred to by all other Lustre modules. This CPT table will cover all online CPUs on the system. The number of CPTs (NCPT) is:

For example, on a 32-core system libcfs will create 4 CPTs, and on a 64-core system it will create 8 CPTs.

The user can also define the CPT table in either of these two ways:

npartitions=NUMBER

Create a CPT table with the specified number of CPTs. All online CPUs will be evenly distributed across the CPTs.

If npartitions is set to "1", it will turn off all CPU node affinity features.

cpu_patterns=STRING, examples:

libcfs will create 4 CPTs: CPT0 contains CPU0 and CPU4, CPT1 contains CPU1 and CPU5, CPT2 contains CPU2 and CPU6, CPT3 contains CPU3 and CPU7.

libcfs will create 4 CPTs: CPT0 contains all CPUs in NUMA node[0-3], CPT1 contains all CPUs in NUMA node[4-7], CPT2 contains all CPUs in NUMA node[8-11], CPT3 contains all CPUs in NUMA node[12-15].

If cpu_patterns is provided, then npartitions will be ignored.

cpu_patterns does not have to cover all online CPUs. This has the effect that the user can make most Lustre server threads execute on a subset of all CPUs.

By default, LND connections for each NI will be hashed to different CPTs by NID, so LND schedulers on different CPTs can process messages for the same NI. However, a user can bind an LNet interface to a specified CPT with:

lnet networks="o2ib0:0(ib0) o2ib1:1(ib1)"

The number after the colon is the CPT index. If the user specifies this number for an LNet NI, then all connections on that NI will only be attached to LND schedulers running on that CPT. This feature could benefit NUMA I/O performance in the future.

In the previous example, all IB connections for o2ib0 will be scheduled on CPT-0, and all IB connections for o2ib1 will be scheduled only on CPT-1.
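The shape of one networks entry with an optional CPT index can be illustrated with a small userspace parser. This is a sketch, not LNet code: struct demo_ni_spec and demo_parse_ni() are hypothetical, the 15-character name limits are arbitrary, and only a single entry such as "o2ib0:0(ib0)" is handled.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical parsed form of one entry such as "o2ib0:0(ib0)". */
struct demo_ni_spec {
    char net[16];    /* network name, e.g. "o2ib0" */
    char iface[16];  /* hardware interface, e.g. "ib0" */
    int cpt;         /* CPT index after the colon, or -1 if none was given */
};

/* Parse "net:cpt(iface)" or "net(iface)"; return 0 on success, -1 on error. */
int demo_parse_ni(const char *entry, struct demo_ni_spec *spec)
{
    /* Form with an explicit CPT index after the colon. */
    if (sscanf(entry, "%15[^:(]:%d(%15[^)])",
               spec->net, &spec->cpt, spec->iface) == 3)
        return spec->cpt >= 0 ? 0 : -1;

    /* Form without a CPT index: connections are hashed across CPTs. */
    if (sscanf(entry, "%15[^:(](%15[^)])", spec->net, spec->iface) == 2) {
        spec->cpt = -1;
        return 0;
    }
    return -1;
}
```

Parsing "o2ib0:0(ib0)" gives network o2ib0 bound to CPT 0 on interface ib0, while an entry without a colon leaves the CPT index at -1 to indicate the default hashed behaviour.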

int LNetEQAlloc(unsigned int count, lnet_eq_handler_t callback, …)

Providing both @count and @callback at the same time is discouraged. @count is only used by LNetEQPoll, and enqueue/dequeue for polling is serialized by a spinlock, which can hurt performance. A better mechanism in SMP environments is to set @count to 0 and receive events through @callback.

LNetMEAttach(unsigned int portal, …, lnet_ins_pos_t pos, lnet_handle_me_t *handle);

@pos can take a new value: LNET_INS_AFTER_CPU. This has the effect that the ME will be attached to the local CPU partition only (the CPU partition that the calling thread is running on). If @pos is LNET_INS_AFTER, then the ME will always be attached to the same partition no matter which partition the caller is running on. In this mode, all LND threads will contend for buffers on that partition, which is expected to degrade performance on SMP.

Integrating the LNet Dynamic Config feature with this feature. This project requires some changes to the initialization and finalization process of LNet, which might conflict with another project, "LNet Dynamic Config".

We expect this project to help SMP scalability on all systems (MDS, OSS, clients), but we have never benchmarked our prototype on non-MDS systems.

This project adds new tunables to the system, and it will not be easy to test all code paths in our automated tests.