A tree node path for a name entry. The red line in Graph-1 is htree path for name E52.

Single directory performance is critical for HPC workloads. Many applications create a separate output file for each task of a job resulting in hundreds of thousands of files written to a single output directory. Currently, both filename lookup and file system modifying operations such as create and unlink are protected with a single lock for the whole directory. This causes MDS threads to block for the one thread holding the directory lock, which may be waiting for disk IO to complete (e.g. reading a directory block from disk, or waiting for enough space in the journal).

This document is high-level design for a parallel locking mechanism for single ldiskfs directories. The current lock for a single directory can only be held by one thread at any time. The new parallel locking mechanism will allow multiple threads to lookup, create and unlink operations simultaneously.

This section is just taken from our “Parallel Directory solution architecture”

Ldiskfs uses a hashed-btree (htree) to organize and locate directory entries, which is protected by a single mutex lock. The single lock protection strategy is simple to understand and implement, but is also a performance bottleneck because directory operations must obtain and hold the lock for their duration. The Parallel Directory Operations (PDO) project implements a new locking mechanism that ensures it is safe for multiple threads to concurrently search and/or modify directory entries in the htree. PDO means MDS and OSS service threads can process multiple create, lookup, and unlink requests in parallel for the shared directory. Users will see performance improvement for these commonly performed operations.

It should be noted that the benefit of PDO may not be visible to applications if the files being accessed are striped across many OSTs. In this case, the performance bottleneck may be with the MDS accessing the many OSTs, and not necessarily the MDS directory.

Htree directories with parallel directory operations will provide optimal performance for large directories. However, within a directory the minimum unit of parallelism is a single directory block (on the order of 50-100 files, depending on filename length). Parallel directory operations will not show performance scaling for modifications within a single directory block, but should not degrade in performance.

In order to be practically useful, any new locking mechanism should should maintain or reduce resource consumption compared to the previous mechanism. To measure this, performance of PDO with a single application thread should be similar to that of the previous mechanism.

The existing htree implementation is well tested and in common usage. To avoid deviating from this state, it is not desirable to significantly restructure the htree implementation of ldiskfs. Ideally, the new lock implementation would be completely external to ldiskfs. In reality, this is not possible, but a maintainable PDO implementation will minimize in-line ldiskfs changes and maximize ease of maintenance. There is relatively little interest to accept this change into the upstream ext4 codebase because it is only useful for a workload that is very uncommon for normal servers (hundreds or thousands of threads operating in the same directory).

ldiskfs filesystem is backend filesystem of Lustre. ldiskfs is an enhanced version of ext4.

Hashed-btree is the data structure that is used by ext3/4 and ldiskfs as directory layout. The figure above illustrates a ldiskfs indexed directory stored as a htree.

There are two types and blocks in a indexed directory:

A tree node path for a name entry. The red line in Graph-1 is htree path for name E52.

Numerous operations may be conducted on a on htree:

Entries in htree directory are indexed by a hashed value of the name. An unlikely, but finite possibility exists that any two names do not generate a unique hash-value. This is called hash collision. If these names spread over more than one block, then searching any of these names needs to iterate over all these blocks, and this should be handled correctly for PDO.

htree-lock is an advanced read/write lock. There are two locking operations for htree-lock.

OSD object is a in-memory object that represents a inode of backend filesystem.

None.

This section of the document covers design of htree-lock and how to exploit htree-lock to support parallel directory operations.

The htree-lock lock mechanism can provide multiple level of protection for tree-like data structure.

There are two external data structures for htree-lock:

Two types of locking operation are available: tree-lock and child-lock. Graph-2 shows how they are used to protect htree directory.

To understand htree-lock in practice, let us assume that two threads want to access the same directory simultaneously (figure above):

For a N-level htree, a child-lock can protect level[PUB:N] and level[PUB:N-1] blocks of htree directory. A tree-lock can protect all other level blocks. Using the example presented in the figure above, block-0, block-1 and block-2 are protected by tree-lock.

The tree-lock API is:

htree_lock(htree_lock_t *lock, htree_lock_head_t *head, int mode)@lock: per-thread lock handle.@head: the htree-lock created for the target resource (directory).@mode: locking mode of tree-lock which can be any of EX, PW, PR, CW, CR. It is anticipated that most operations will only take CW and CR so the htree can be accessed concurrently.htree_unlock(htree_lock_t *lock)If a share access mode lock (CW or CR) is obtained for a htree an additional “child lock” may also be obtained and additional protection is gained on portion of the htree:

htree_node_lock(htree_lock_t *lock, int mode, __u32 key, int depth).htree_lock()

@lock: per-thread lock handle.@mode: child lock mode, there are only two locking modes for child lock (PW and PR).@key: ID of the child resource to protect, for example: block-ID.@depth:htree_node_lock(lock, HTREE_LOCK_PR, 3, 0);htree_node_lock(lock, HTREE_LOCK_PR, 6, 1);htree_node_lock(lock, HTREE_LOCK_PW, 9, 1);htree_node_unlock(htree_lock_t *lock, int depth, void *event)@depth,Child-lock advanced features:

htree_node_lock_try(… int wait, …);@wait is false and required lock can not be granted. Otherwise this call will return 1 with hold of the required lock. This feature can be used as a spinlock for some fast operations. This feature may also be helpful for violating locking order to avoid extra locking dance and possible thread context switch.@event_buf. As a result, thread-1 listens on “locking event” of the child-lock it just released. thread-1 will stop listening after calling htree_node_stop_listen().htree_node_stop_listen()). thread-2 has obtained a lock for the same resource @key and sent @event to all listeners on the resource.This feature will provide for complex locking strategies.

|

Thread 1 |

Thread 2 |

1 |

|

|

2 |

…… |

|

3 |

|

|

4 |

|

|

5 |

|

…… |

Buffer least recently used (LRU) is a per-cpu cache of Linux for fast searching of buffers. The default buffer LRU size is eight. Eight is too small for Lustre where supporting N-levels htree for very large directories is desirable. In addition, future Distributed Namespace work will require a larger LRU. If the default buffer size remains at eight, as buffer slots are consumed hot buffers may be excluded from the LRU cache because the LRU cache is unrealistically small. This is scenario will significantly harm performance as buffers required - but not available in the LRU cache - are slow and expensive to find.

A simple kernel patch will be necessary to allow specification of the LRU size. By default, the LRU size to be set to 16.

Obtain EX tree-lock for all changing operations and obtain PR tree-lock for all non-changing operations.

Obtain PR tree-lock. Currently Lustre Distributed Lock Manager (ldlm) will obtain PR lock on the directory. Improving this behavior requires changing ldlm and is out of scope of this project.

Obtain CR tree-lock and obtain PR child-lock for the target DE-block. If a hash-collision occurs, also obtain PR DX-lock for the last level indices-block to ensure searching will be atomic. Additional details on this topic appears in the logic specifications.

Obtain CW htree-lock and obtain PW child-lock for the target DE-block. The PW lock is obtained on the last level DX-block to ensure searching is atomic if there is hash collision.

Obtain CW htree-lock and obtain PW lock for the target DE-block. The PW child-lock is obtained for the last level DX-block if the target entries-block is full and needs to be split.

If the last DX-block is full and a split is required, then re-lock the htree with EX mode and retry. As discussed, this is expected to be sufficiently rare that this overhead can be ignored.

Thousands of server threads may enter the same directory by holding shared lock mode (CW, CR). If compatible locking request are always granted, and there are a few threads acquire incompatible locks (i.e. EX), starvation may occur. A simple solution if presented that will block locking request if:

As discussed, most locking requests require compatible locks. As a result, this scheme is not expected to degrade performance.

Assume thousands of server threads have obtained tree-lock with shared mode. Now assume these threads operate on different child resource (e.g. search on different entries-block). Each thread will acquire a child-lock with different key (block-ID). These child-locks (htree_lock_t) will enqueue to a increasing list if they are simply linked on htree_lock_head_t. As such a list grows, search performance for a given block-ID will degrade.

As a result, new structure name skiplist will replace the list. The figure below illustrates skiplist.

Child-locks protect level[PUB:N-1] DX-block and level[PUB:N] DE-blocks. Other changes are protected by htree-lock. 2-depths child lock are defined as:

The use-cases include:

However, this design has limitations: Assume the tree has only one level DX-block (one DX-block). In this case, all threads always require PR/PW lock on the DX-block. The effect is that htree-lock will behave as a single rw-semaphore and parallel locking benefit is lost.

Assume the tree has two level DX-blocks, and there are a small number of second level DX-blocks. In this case, the effect is a small number of rw-semaphores to protect the htree directory. If many hundred of threads request parallel access to the directory, and only a small number of rw-semaphores are available, only a few threads can enter at any time.

The solution to this problem is add another depth for child-lock:

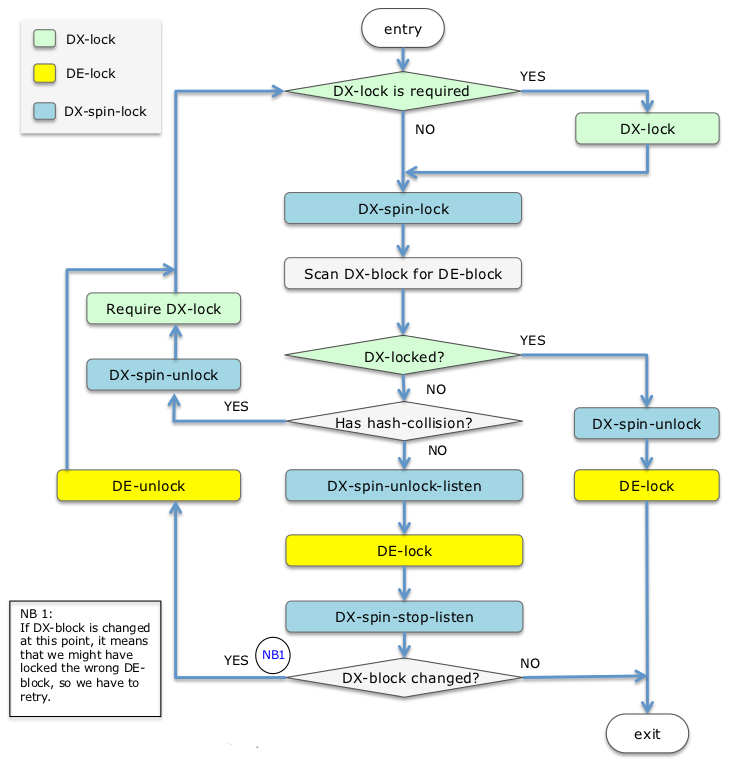

DX-lock and DE-lock are both blocking locks, DX-spinlock is non-blocking lock. After obtaining DX-spinlock it should not be necessary to acquire a DX/DE-lock. The locking order must be: DX-lock, DE-lock, DX-spinlock. All these locks only have two modes: PR and PW.

Probe htree patch is a key functions that must be changed within ldiskfs. The function name is dx_probe(). dx_probe() probes htree-path for a name entry.

In current ldiskfs, this function is only called with hold RW semaphore. RW semaphore only two modes: protected read and protected write.

PDO requires a new implementation to support shared locking mode (CR or CW). While one thread is searching the htree, other threads may simultaneously change the htree. The new dx_probe() must ensure data correctness.

The figure above illustrates the logic using child-locks of htree-lock to protect the process of dx_probe(). The method is:

dx_probe() with hold shared locking mode (CR or CW).This is logic specification for add entry or split DE-block, which is dx_add_entry() of ldiskfs. This function is always called after dx_probe(). The target DE-block will be already locked by DE-lock. To simplify the figure, growing htrees and DX-block split have been omitted.

If the DX-block is full or the tree is full and needs to grow, we just need to lock the whole htree with EX mode and repeat the process of dx_probe() and dx_add_entry().

Removal of name entry (delete_entry) is simple. It is called after after dx_probe() and inherits the locked target DE-block returned by dx_probe(). No changes are necessary to delete_entry().

Htree growing logic is as follows:

(a) and (b) show htree growing from 1-level to 2-level. (c) and (d) illustrate htree growing from N-level to (N+1) level. A new DX-block is added as the only child of DX-block 0 and obtains children of DX-block 0.

A minor difference exists between growing from 1-level to 2-level, and N-level to (N+1)-level:

For example, spliting DE-block[PUB:2] in Graph-6 (a): after the htree grows, the htree will become (b). A new DX-block[PUB:X] will always have free bytes for new DE-block split from DE-block[PUB:2]. In this case a split on DE-block[PUB:2] can be performed and the new DE-block added to DX-block[PUB:X]. Finally, insert name entry to target DE-block.

This situation can be resolve by simply aborting the insert operation after growing the tree and restarting from dx_probe. In the second shot, DX-block[PUB:101] is full but a split can be performed because the new DX-block[PUB:Y] has free entries. In this case, the process is splitting DX-block, splitting DE-block and inserting an entry.

In practice, to simplify the logic, dx_probe() is always restarted after growing of htree.

Htree-lock can provide private fields to store extra information. For example, block-0 (root block of the directory) can be cached. Subsequent threads entering the same directory will not have to search the block again. This will save time and in some cases a slot in buffer LRU cache. This feature is optional as it is unknown what the performance benefit will be in practice and it may increase overhead of single thread use-case.

Define configurable parameters available in the feature.

New module parameter for ldiskfs:

A proc entry “ldiskfs_pdo” will be provided to osd-ldiskfs so user can turn on/off PDO without reloading moudules.

Options for buffer LRU size in kernel config file: 8, 16, 32, default will be 16 instead of 8.

A new parameter @hlock will be added to a couple of exported ldiskfs/ext4 APIs:

int ldiskfs_add_entry(handle_t \*handle, struct dentry \*dentry, struct inode \*inode, htree_lock_t \*hlock); |

struct buffer_head *

ldiskfs_find_entry(struct inode \*dir, const struct qstr \*d_name, struct

ext4_dir_entry_2 \*\* res_dir, htree_lock_t \*hlock);

|

The new parameter is a thread htree-lock handle created in osd-ldiskfs. If these APIs are called from VFS, @hlock should be NULL and ldiskfs will assume that PDO is off and protection comes from semaphore. All operations on @hlock should be NOOP in that case.

N/A

Htree-lock is more expensive lock than regular rw-semaphore or mutex. Single threaded operations will likely have higher overheads while using htree-lock - although multiple threads have better concurrency. It is unlikely to be a significant resource drain because locking overhead is just a tiny part relative to other processing of creating/removing a file over Lustre server stack.