The following example shows how to build a Lustre cluster on AWS. It takes most of the defaults (for example, 4 OSS servers and 2 clients), but customization is fairly easy to figure out. At the end you will have a 4-server Lustre filesystem with 2 clients that you can then experiment with.

If you already have clients on which you would like to mount your new Lustre filesystem, see Cloud Edition for Lustre software - Client Setup for instructions on using the ce-configure command to set up your clients for Lustre access.

You will first need an AWS account. Follow the instructions to set up an AWS account.

You will need an SSH key for SSH access to your cluster, so create a public and private key pair and place it on your system.

For Windows users, you can use puttygen to generate the key. You can also generate a key in AWS and copy the private key back.

In either case, it is highly recommended to use a new key. The example uses a key generated locally (not on AWS).
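If you generate the key locally with OpenSSH, the step above can be sketched as follows. This is only an illustration: the key path `~/.ssh/aws-demo` and the helper name `keygen_command` are assumptions made for this example, and the script only prints the `ssh-keygen` command rather than running it.

```python
import shlex
from pathlib import Path


def keygen_command(key_path, comment="aws-demo", bits=4096):
    """Return the ssh-keygen argv that creates an RSA key pair at key_path.

    ssh-keygen writes the private key to key_path and the OpenSSH-format
    public key to key_path + ".pub"; it prompts for a passphrase when run.
    """
    return [
        "ssh-keygen",
        "-t", "rsa",          # key type
        "-b", str(bits),      # key length in bits
        "-C", comment,        # free-text label stored with the public key
        "-f", str(key_path),  # output file for the private key
    ]


# Hypothetical location; any path on your own system works.
cmd = keygen_command(Path("~/.ssh/aws-demo").expanduser())
print(shlex.join(cmd))
# To actually create the key pair: subprocess.run(cmd, check=True)
```

The resulting `.pub` file is the public key you would upload later; the private key stays on your system.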

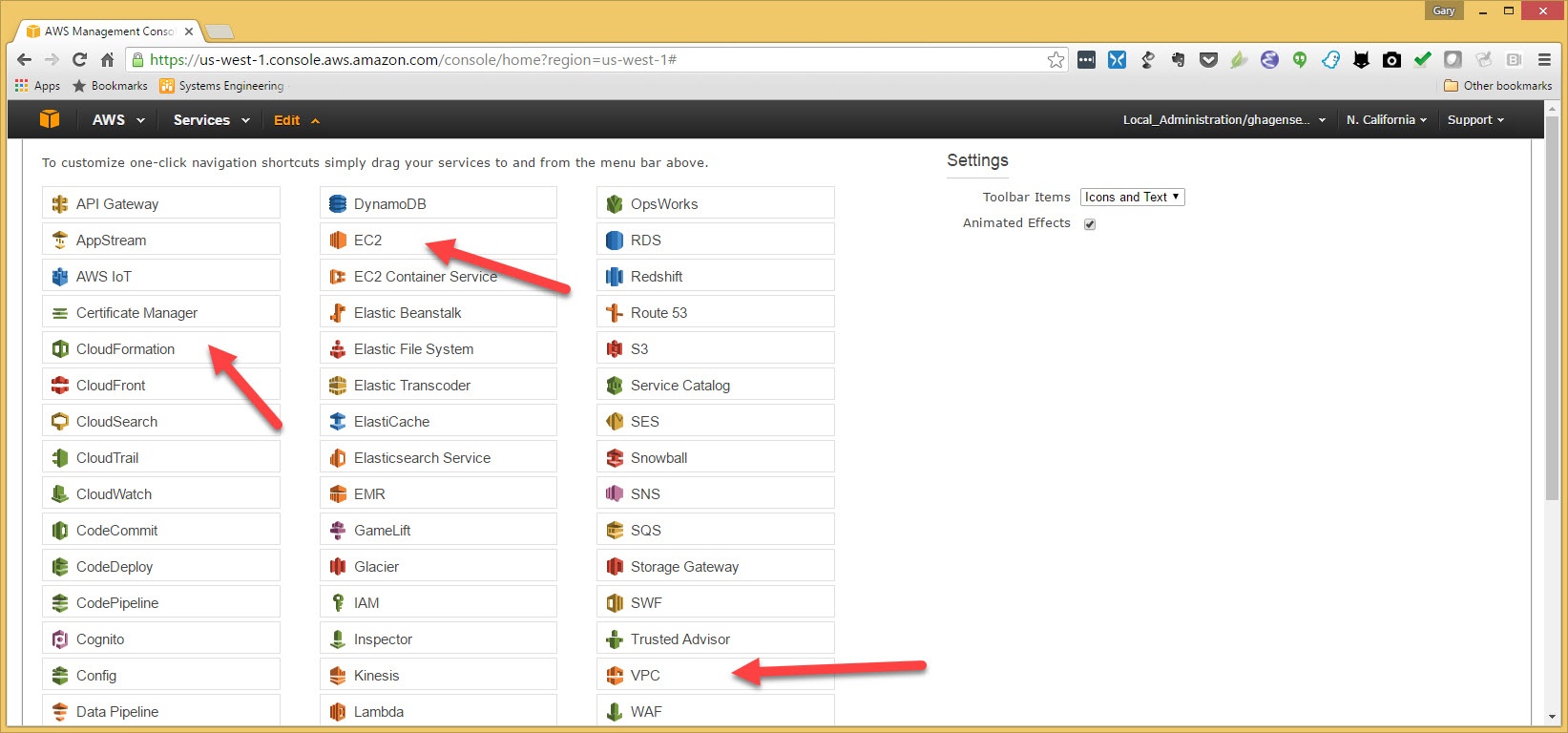

Once you have access, log in and you will see the following screen. To make things easier, move a few services to the access bar.

Click "Edit" and drag and drop EC2, VPC and Cloud Formation to the access bar (after "Services" and before "Edit").

Now the access bar will look like the following. The first thing to do will be to create a key pair for accessing our cluster once it is created.

So click on "EC2".

On the EC2 dashboard, look for "Key Pairs" in the left panel under "Network and Security".

Then choose "Import Keys" and upload an OpenSSH compatible public key. The template we will run later will put this public key in all instances it creates.

You also have the option of creating a key here by choosing "Create Key Pair", but this example will use a key that you generate on your system.

This next screenshot also shows a puttygen window for Windows users. Puttygen does not save an OpenSSH compatible public key so you cannot upload a saved public key.

However, if you load your private key into puttygen, you can copy an OpenSSH compatible key from the box shown here and paste it into the import key box. Give your key a unique name that you can remember.
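If you are not sure whether the text you are about to paste is in the OpenSSH format, a quick check like the one below can help. It is a minimal sketch, not a full validator: it only tests for the one-line `<key-type> <base64> [comment]` layout, and the helper name `is_openssh_public_key` and both sample keys are made up for illustration (the sample blob is synthetic, not a usable key).

```python
import base64
import binascii
import struct


def is_openssh_public_key(text):
    """Return True if text looks like a one-line OpenSSH public key.

    The expected layout is "<key-type> <base64-blob> [comment]", where the
    decoded blob starts with a 4-byte big-endian length followed by the
    same key-type string.
    """
    fields = text.strip().split(None, 2)
    if len(fields) < 2:
        return False
    key_type, blob_b64 = fields[0], fields[1]
    try:
        blob = base64.b64decode(blob_b64, validate=True)
    except (binascii.Error, ValueError):
        return False
    if len(blob) < 4:
        return False
    (name_len,) = struct.unpack(">I", blob[:4])
    return blob[4:4 + name_len] == key_type.encode("utf-8")


# Synthetic blob with a valid header, for demonstration only.
fake_blob = struct.pack(">I", len(b"ssh-rsa")) + b"ssh-rsa" + b"\x00" * 16
openssh_line = "ssh-rsa " + base64.b64encode(fake_blob).decode("ascii") + " aws-demo"

# The multi-line layout that puttygen writes with "Save public key".
putty_saved = (
    "---- BEGIN SSH2 PUBLIC KEY ----\n"
    'Comment: "imported-key"\n'
    "AAAAB3NzaC1yc2EAAAAA\n"
    "---- END SSH2 PUBLIC KEY ----\n"
)

print(is_openssh_public_key(openssh_line))
print(is_openssh_public_key(putty_saved))
```

The first sample passes the check and the second does not, which mirrors why a public key saved by puttygen cannot be uploaded directly.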

Now we see our key which we named "aws-demo" listed as a key pair. We will use this later when we create our cluster.

The next thing we need to set up is a VPC (Virtual Private Cloud). We will use an AWS wizard to do this. Start by choosing "VPC" from the access bar.

Click on "start VPC Wizard" to create our VPC.

Choose the first option on the right, "VPC with a single Public Subnet", and click "Select".

Fill in the address range you would like. Do not reuse the values from this example, so that you avoid conflicts with anyone else who may be following it. For HPDD this is a shared account.

For this example I used "10.81.0.0/16" and named the VPC "aws-demo".

I also made my public subnet 10.81.10.0/24 and named this subnet "awsdemo-subnet".
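When you pick your own ranges, the subnet has to fall inside the VPC's address range. The sketch below checks this with Python's standard `ipaddress` module, using the example values from above; the helper name `check_subnet` is just for illustration.

```python
import ipaddress


def check_subnet(vpc_cidr, subnet_cidr):
    """Raise ValueError unless subnet_cidr lies inside vpc_cidr.

    Returns the number of addresses in the VPC range and in the subnet.
    """
    vpc = ipaddress.ip_network(vpc_cidr)
    subnet = ipaddress.ip_network(subnet_cidr)
    if not subnet.subnet_of(vpc):
        raise ValueError(f"{subnet} is not inside {vpc}")
    return vpc.num_addresses, subnet.num_addresses


# Values used in this example; substitute your own ranges.
print(check_subnet("10.81.0.0/16", "10.81.10.0/24"))  # (65536, 256)
```

A subnet such as 10.82.10.0/24 would raise a `ValueError` here, because it is outside 10.81.0.0/16.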

When all the fields are filled in, click on "Create VPC".

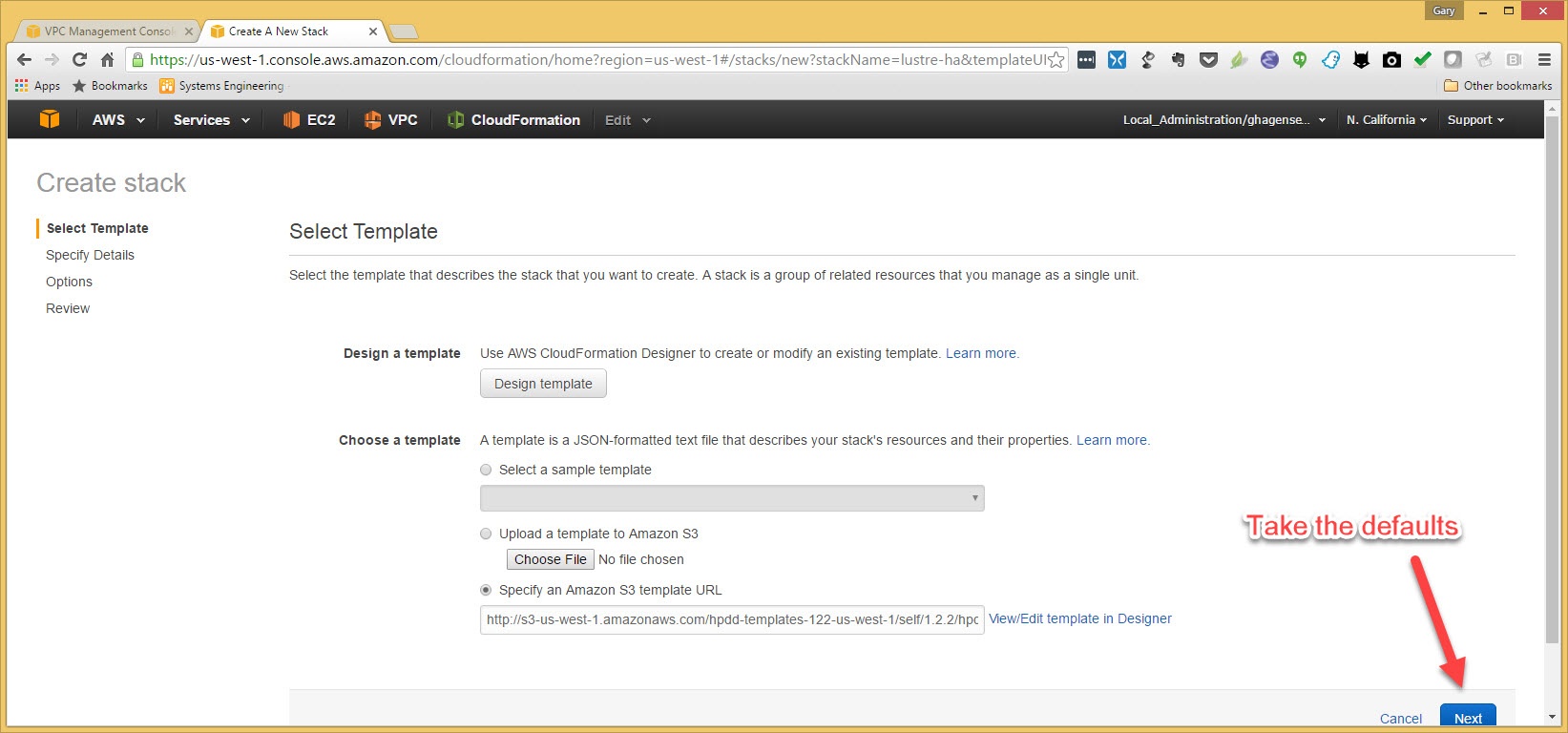

Now browse to "Cloud Edition for Lustre - Self Support"; this page lists all the stacks that are available. For this example we are using the California AWS region.

Just take the defaults on the next screen and click "Next".

On the next page, give your stack a unique name; "aws-demo-lustre-ha" is used for this example.

Also give your filesystem a name; "demofs" is used for this example.

Choose the key pair we made from the drop down list.

The screen above offers other customizations that you can make. If you want over-the-wire encryption (uses IPsec), then set the option "Enable Encryption" to "True".

You can pre-populate your cluster with data from an S3 bucket. Use the "ImportBucket" field to specify your bucket, "ImportDest" for where you want the data in the Lustre filesystem, and "importPrefix" to specify a starting point in your bucket.

The "MdsInstanceType" and "OssInstanceType" fields are used to change the type of storage that the MDS and all OSS servers will use.

Use "OSSCount" to change the default of 4 OSS servers to the number you need.

"OssVolumeCount" and "OstVolumeSize" change the number and size of the EBS volumes used for an OST.

The screenshot below shows "WorkerCount" and "WorkerInstanceType", which specify the number of client nodes you would like created and the type of VM instance to use. You do not need these if you have your own clients, configured separately, that you want to connect to this filesystem.

To continue with this example, choose the VPC we created in the "Vpcid" drop-down box.

Fill out the CIDR and choose the subnet we created when we created our VPC.
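If you later want to script the stack creation instead of using the GUI, the same choices have to be expressed as a list of `ParameterKey`/`ParameterValue` pairs, which is the shape CloudFormation's API takes for stack parameters. The sketch below only builds that list. It assumes the field names shown on the screens above are also the template's parameter keys, which this walkthrough does not confirm, and the VPC ID is a placeholder.

```python
def to_cfn_parameters(settings):
    """Convert {name: value} into CloudFormation's Parameters list shape.

    CloudFormation passes parameter values as strings, so every value is
    converted with str().
    """
    return [
        {"ParameterKey": key, "ParameterValue": str(value)}
        for key, value in settings.items()
    ]


# Keys are the field names from the screens above (assumed to match the
# template's parameter keys); values are the defaults this example uses.
settings = {
    "OSSCount": 4,             # default number of OSS servers
    "WorkerCount": 2,          # default number of clients
    "Vpcid": "vpc-00000000",   # placeholder, not a real VPC ID
}

parameters = to_cfn_parameters(settings)
for parameter in parameters:
    print(parameter)
```

Check the key names against the template you launch before relying on them.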

Go to the next screen and click on "Next". No changes will be made on this screen.

Check the box (yes, we know AWS is not free), and then click on "Create".

When the GUI transitions to the next page, you may need to refresh using the refresh symbol in the upper right of the page.

But after a while you will see that your Lustre cluster is up and running. Look in the "Outputs" tab for the IP address to use to SSH to the NAT instance of your cluster.

You need to ssh using username "ec2-user".

You will also see a link that you can click to open your browser to the dashboard for the cluster and the Ganglia output for your cluster.

You can return to this page any time by clicking on "Cloud Formation" that we put on the access bar.
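The same "Outputs" information is available from the CloudFormation API, where a describe-stacks response carries it as a list of `OutputKey`/`OutputValue` entries. The sketch below turns such a response into a plain dictionary. The sample response is hand-written, and the output key names `NatAddress` and `DashboardUrl` are hypothetical; look at your own "Outputs" tab for the real names.

```python
def stack_outputs(describe_stacks_response):
    """Return the first stack's outputs as a {key: value} dictionary."""
    stack = describe_stacks_response["Stacks"][0]
    return {
        output["OutputKey"]: output["OutputValue"]
        for output in stack.get("Outputs", [])
    }


# Hand-written sample in the shape of a describe-stacks response.
# The output keys and the address are made up for illustration.
sample_response = {
    "Stacks": [
        {
            "StackName": "aws-demo-lustre-ha",
            "StackStatus": "CREATE_COMPLETE",
            "Outputs": [
                {"OutputKey": "NatAddress", "OutputValue": "203.0.113.10"},
                {"OutputKey": "DashboardUrl", "OutputValue": "http://203.0.113.10/"},
            ],
        }
    ]
}

outputs = stack_outputs(sample_response)
print(outputs["NatAddress"])
```

A stack that is still being created may have no outputs yet, in which case the function returns an empty dictionary.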

To access the servers, you will need to SSH to the NAT node with an SSH agent active and agent forwarding enabled, and then SSH from the NAT node to the servers.

For Windows, start "Pageant" and add your private key. Then, in PuTTY, go to the Auth page under SSH and check the box to "allow agent forwarding". In PuTTY, also remember to point to your private key in the Auth section.

Once you are SSHed to the NAT node, you can ssh to the servers, again as "ec2-user". sudo will work from ec2-user.
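With OpenSSH instead of PuTTY, the two hops look roughly like the commands printed by the sketch below. The addresses and the key path are placeholders made up for this example (use the NAT address from the "Outputs" tab and your own server address), and the script only prints the commands.

```python
import shlex


def add_key_command(key_path):
    """Load the private key into the running SSH agent."""
    return ["ssh-add", key_path]


def nat_ssh_command(nat_ip, user="ec2-user"):
    """SSH to the NAT node; -A enables agent forwarding for the next hop."""
    return ["ssh", "-A", f"{user}@{nat_ip}"]


def server_ssh_command(server_ip, user="ec2-user"):
    """Run on the NAT node; authentication uses the forwarded agent."""
    return ["ssh", f"{user}@{server_ip}"]


# Placeholder values for illustration only.
steps = [
    add_key_command("~/.ssh/aws-demo"),
    nat_ssh_command("203.0.113.10"),
    server_ssh_command("10.81.10.25"),
]
for step in steps:
    print(shlex.join(step))
```

Agent forwarding is what lets the second hop authenticate without copying your private key onto the NAT node.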

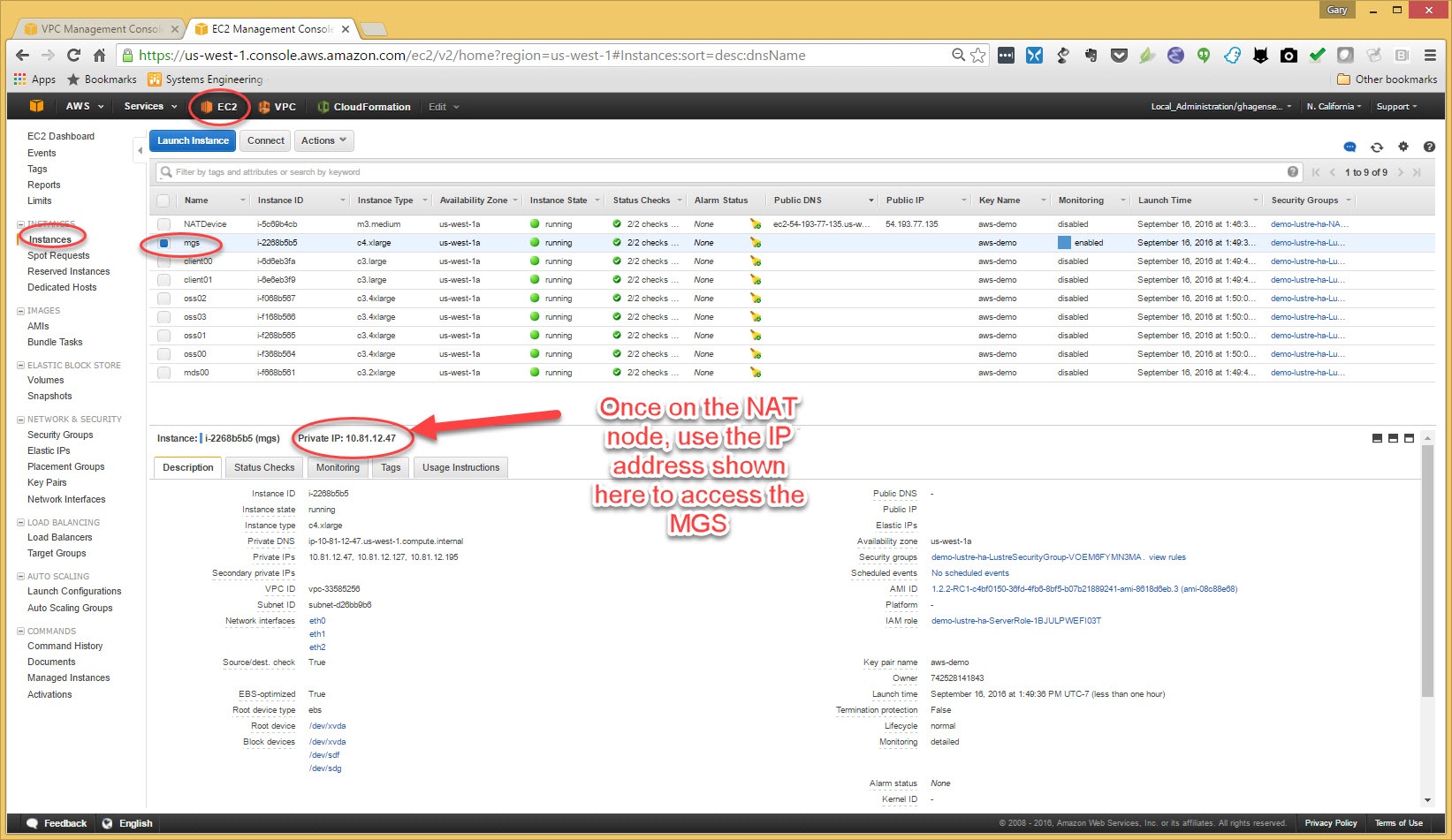

To get the IP addresses of the servers or clients that were created, click on "EC2" from the access bar, click on "Instances" in the left-hand pane, and then select the server.

Here the MGS is shown. In the bottom pane the IP address of the MGS is shown at the top. You can also get IP addresses for the servers from the dashboard->cluster page.

You can get the IP address to access your clients, mount the filesystem and have some Lustre fun on AWS.

If you have other clients on which you would like to mount your new Lustre filesystem, see Cloud Edition for Lustre software - Client Setup for instructions on using the ce-configure command to set up your clients for Lustre access.